And ofc it's just to raise their stock a little bit :) Big corpos are real-life monsters

GlowHuddy

Could be both of those things as well.

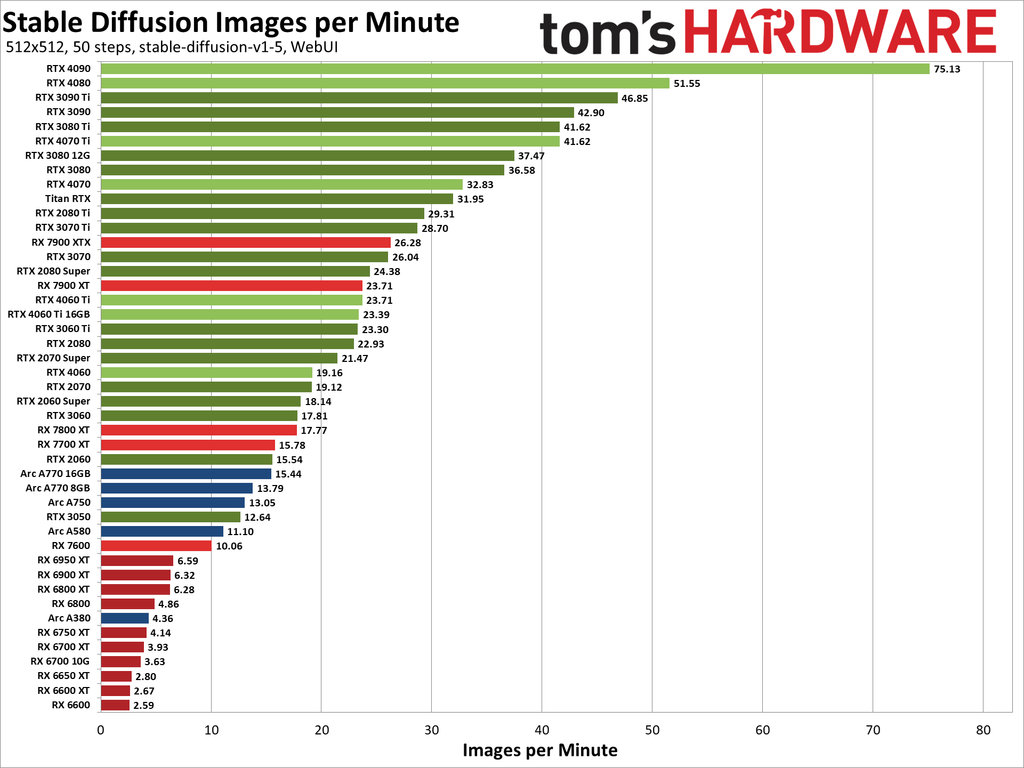

Yeah, I'm currently using that one, and I would happily stick with it, but it seems AMD hardware just isn't up to par with NVIDIA when it comes to ML.

Just take a look at the benchmarks for Stable Diffusion:

Now I'm actually considering that one as well. Or I'll wait a generation, I guess, since maybe by then Radeon will at least be comparable to NVIDIA in terms of compute/ML.

Damn you, NVIDIA

Yeah, I was just reading about it and it kind of sucks, since one of the main reasons I wanted to switch to Wayland was multi-monitor VRR, and I can see that's also an issue without explicit sync :/

Interesting thought; maybe it's a mix of both of those factors? I mean, I remember using AI to work with images a few years back when I was still studying. It was mostly detection and segmentation, though. But generation seems like a natural next step.

But image generation research definitely doesn't suffer from a lack of funding and resources nowadays.

I mean, we didn't choose it directly - it just turns out that's what AI seems to be really good at. Companies firing people because it's 'cheaper' this way (despite the tech still not being perfect) is another story, tho.

wow TIL sth as well I guess

The frequency is not directly proportional to the wavelength - it's inversely proportional: https://en.wikipedia.org/wiki/Proportionality_(mathematics)#Inverse_proportionality

Think of it this way: the wavelength is the distance light travels during one wave, i.e. one cycle. Light always propagates at the same speed c, so frequency times wavelength is constant (f = c/λ): the shorter the wavelength, the more cycles pass a given point each second, so the higher the frequency. If the wavelength is halved, the frequency doubles. As the wavelength approaches 0, the frequency grows faster and faster, approaching infinity.

The plot of frequency against wavelength is not a straight line but a hyperbola.
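If it helps, here's a quick Python sketch of the inverse relationship f = c/λ. It assumes light in vacuum (so c = 299,792,458 m/s), and the function name `frequency_hz` is just something I made up for illustration. Each step in the loop halves the wavelength, and you can see the frequency double each time:

```python
# Speed of light in vacuum, in metres per second (exact by the SI definition).
# Assumption: light travelling in vacuum, not in glass, water, etc.
C = 299_792_458.0

def frequency_hz(wavelength_m: float) -> float:
    """Return the frequency (Hz) of light with the given vacuum wavelength (m), via f = c / wavelength."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return C / wavelength_m

# Halve the wavelength at each step; the frequency doubles each time,
# because frequency * wavelength always equals C (inverse proportionality).
for wl_nm in (800, 400, 200, 100):
    wl_m = wl_nm * 1e-9  # convert nanometres to metres
    print(f"{wl_nm:>4} nm -> {frequency_hz(wl_m):.3e} Hz")
```

Plot `frequency_hz` over a range of wavelengths and you get the hyperbola: it shoots up towards infinity as the wavelength goes to 0.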

Super + T my favorite

For Logitech devices, there's also Solaar.

You can check whether it has the functionality you want (I'm not sure, since I haven't used it much, and only for basic stuff).

Unfortunately, their story didn't end well.

https://en.m.wikipedia.org/wiki/Katyn_massacre