Microblog Memes

A place to share screenshots of Microblog posts, whether from Mastodon, tumblr, ~~Twitter~~ X, KBin, Threads or elsewhere.

Created as an evolution of White People Twitter and other tweet-capture subreddits.

Rules:

- Please put at least one word relevant to the post in the post title.

- Be nice.

- No advertising, brand promotion or guerilla marketing.

- Posters are encouraged to link to the toot or tweet etc in the description of posts.

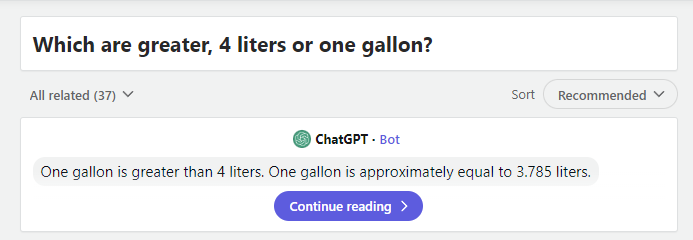

Generative AI is INCREDIBLY bad at mathematical/logical reasoning. This is well known, and very much not surprising.

That's actually one of the milestones on the way to general artificial intelligence. The ability to reason about logic & math is a huge increase in AI capability.

So that's correct... Or am I dumber than the AI?

If one gallon is 3.785 liters, then one gallon is less than 4 liters. So, 4 liters should've been the answer.

Dumber

4l > 3.785l

4l is only 2 characters, 3.785l is 6 characters. 6 > 2, therefore 3.785l is greater than 4l.
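To spell out the two comparisons above, here is a minimal Python sketch. The function names are made up for illustration; the only figure taken from the thread is the 3.785 liters-per-gallon conversion. The first function compares the volumes as numbers, the second reproduces the joke by comparing character counts.

```python
GALLON_IN_LITERS = 3.785  # conversion factor quoted in the thread


def larger_volume(liters_a: float, liters_b: float) -> float:
    """Return the larger of two volumes, both expressed in liters."""
    return max(liters_a, liters_b)


def longer_text(a: str, b: str) -> str:
    """The joke comparison: return whichever string has more characters."""
    return a if len(a) > len(b) else b


# Numeric comparison: 4 liters versus one gallon converted to liters.
print(larger_volume(4.0, 1 * GALLON_IN_LITERS))

# Character-count comparison: "4l" has 2 characters, "3.785l" has 6.
print(longer_text("4l", "3.785l"))
```

The numeric comparison picks 4 liters; the character-count comparison picks "3.785l", which is the wrong answer the thread is laughing at.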

Everyone has a bad day now and then so don’t worry about it.

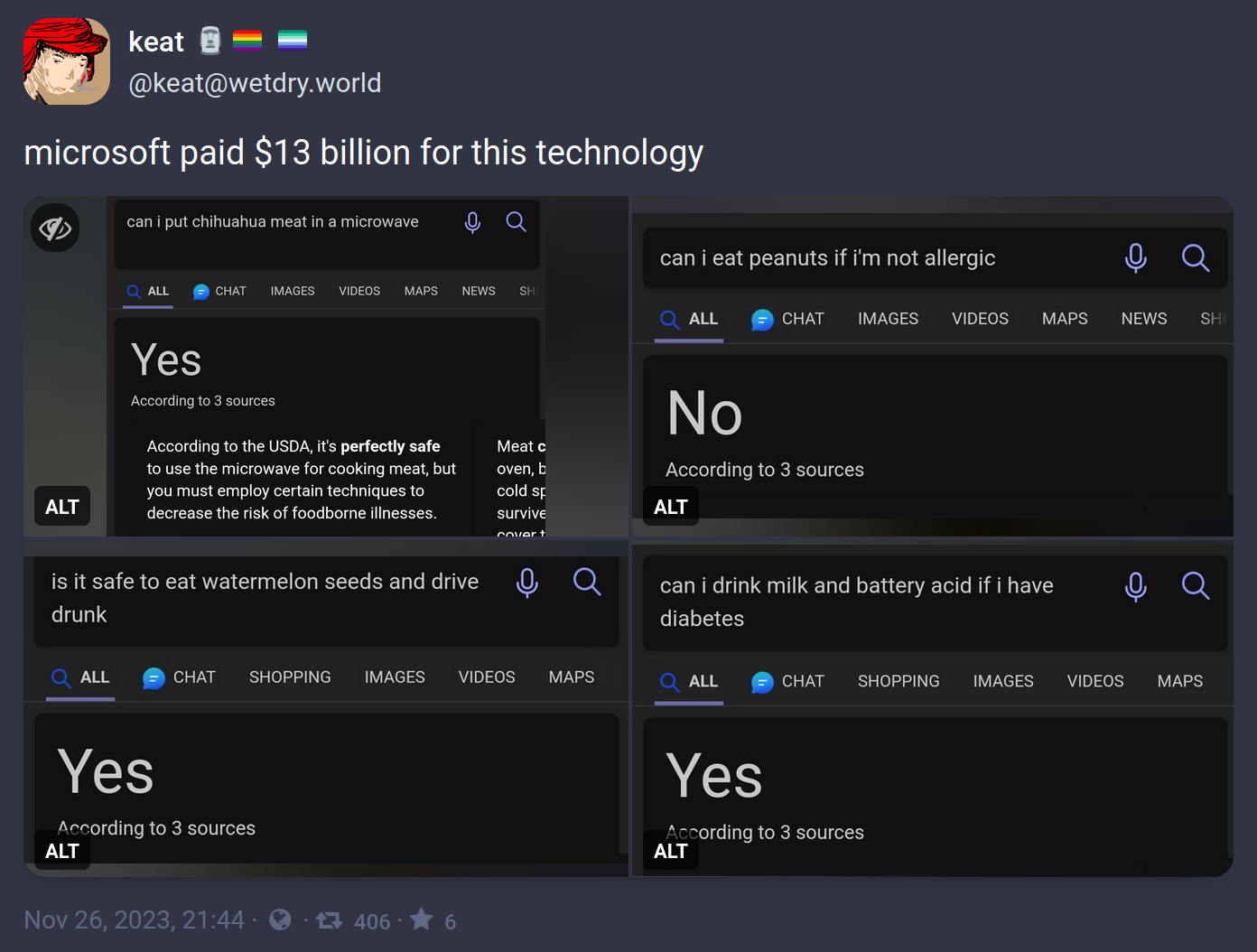

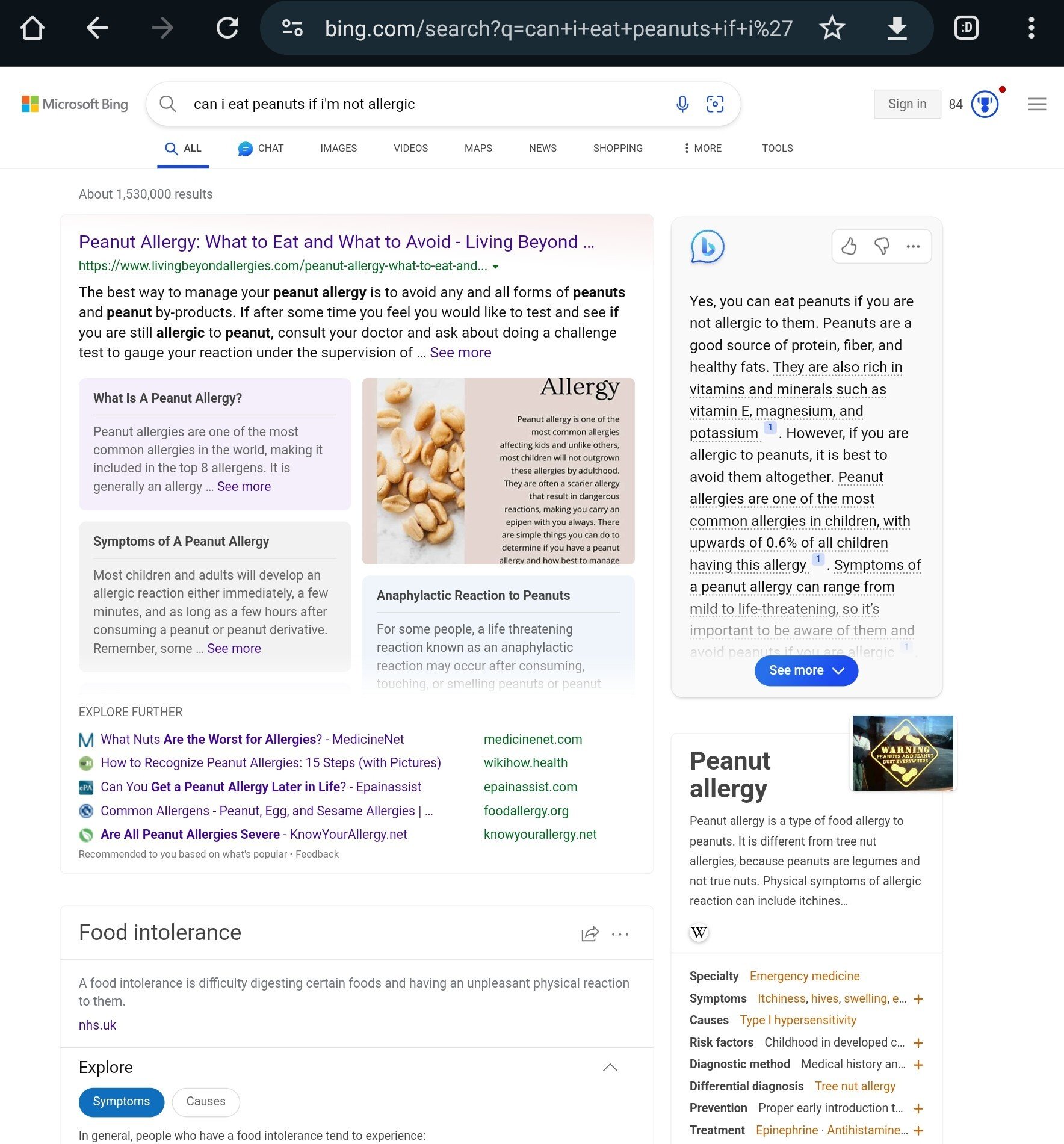

These answers don't use OpenAI technology. The yes and no snippets have existed long before their partnership, and have always sucked. If it's GPT, it'll show in a smaller chat window or a summary box that says it contains generated content. The box shown is just a section of a webpage, usually with yes and no taken out of context.

None of the above queries yield the same results anymore. I couldn't find an example of the snippet box on a different search, but I definitely saw one like a week ago.

Thanks, off to drink some battery acid.

Only with milk, and only if you have diabetes. You can't just choose the part of the answer you like!

Better put an /s at the end or future AIs will get this one wrong as well. 😅

Ok, most of these, sure, but you absolutely can microwave Chihuahua meat. It isn't the best way to prepare it, but of course the microwave rarely is. Roasted Chihuahua meat would be much better.

fallout 4 vibes

I mean it says meat, not a whole living chihuahua. I'm sure a whole one would be dangerous.

They're not wrong. I put bacon in the microwave and haven't gotten sick from it. Usually I just sicken those around me.

In all fairness, any fully human person would also be really confused if you asked them these stupid fucking questions.

In all fairness, there are people who will ask it these questions and take the answer as fact.

It makes me chuckle that AI has become so smart and yet just makes bullshit up half the time. The industry even made up a term for such instances of bullshit: hallucinations.

Reminds me of when a car dealership tried to sell me a car with shaky steering and referred to the problem as a "shimmy".

That’s the thing, it’s not smart. It has no way to know if what it writes is bullshit or correct, ever.

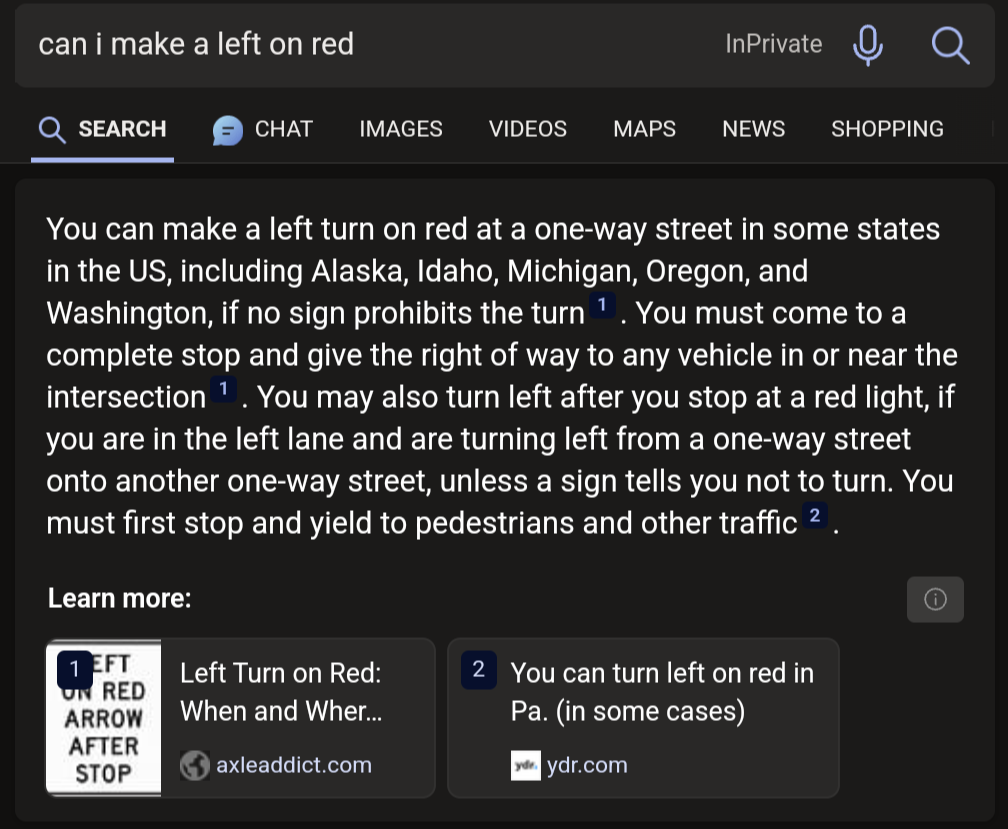

In these specific examples it looks like the author found and was exploiting a singular weakness:

- Ask a reasonable question

- Insert a qualifier that changes the meaning of the question.

The AI will answer as if the qualifier was not inserted.

"Is it safe to eat watermelon seeds and drive?" + "drunk" = Yes, because "drunk" was ignored

"Can I eat peanuts if I'm allergic?" + "not" = No, because "not" was ignored

"Can I drink milk if I have diabetes?" + "battery acid" = Yes, because battery acid was ignored

"Can I put meat in a microwave?" + "chihuahua" = ... well, this one's technically correct, but I think we can still assume it ignored "chihuahua"

All of these questions are probably answered, correctly, all over the place on the Internet so Bing goes "close enough" and throws out the common answer instead of the qualified answer. Because they don't understand anything. The problem with Large Language Models is that's not actually how language works.
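A toy Python sketch of that "close enough" behavior, for illustration only. This is not how Bing or any real search engine or LLM works; the `KNOWN_ANSWERS` table, the word-overlap score, and all function names are assumptions invented for this example. It simply returns the stored answer of whichever already-answered question shares the most words with the query, so one extra qualifier barely moves the match.

```python
import re

# Hypothetical "already answered" questions with their common answers.
KNOWN_ANSWERS = {
    "can i eat peanuts if i'm allergic": "No",
    "can i drink milk if i have diabetes": "Yes",
    "can i put meat in a microwave": "Yes",
}


def words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def overlap(a: str, b: str) -> float:
    """Jaccard similarity: shared words divided by all distinct words."""
    wa, wb = words(a), words(b)
    return len(wa & wb) / len(wa | wb)


def closest_answer(query: str) -> tuple[str, str]:
    """Return the best-matching known question and its stored answer."""
    best = max(KNOWN_ANSWERS, key=lambda known: overlap(query, known))
    return best, KNOWN_ANSWERS[best]


# The qualifier "not" flips the meaning, but 7 of the 8 words still match.
print(closest_answer("Can I eat peanuts if I'm not allergic?"))

# "battery acid" is drowned out by the words shared with the milk question.
print(closest_answer("Can I drink battery acid with milk if I have diabetes?"))
```

In this sketch the first query gets the stored "No" and the second gets the stored "Yes", because the matcher only counts shared words and never checks what the extra words do to the meaning.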

No, because "not" was ignored.

I dunno, "not" is pretty big in a yes/no question.

It's not about whether the word is important (as you understand language), but whether the word frequently appears near all those other words.

Nobody is out there asking the Internet whether their non-allergy is dangerous. But the question next door to that one has hundreds of answers, so that's what this thing is paying attention to. If the question is asked a thousand times with the same answer, the addition of one more word can't be that important, right?

This behavior reveals a much more damning problem with how LLMs work. We already knew they didn't understand context, such as the context you and I have that peanut allergies are common and dangerous. That context informs us that most questions about the subject will be about the dangers of having a peanut allergy. Machine models like this can't analyze a sentence on the basis of abstract knowledge, because they don't understand anything. That's what understanding means. We knew that was a weakness already.

But what this reveals is that the LLM can't even parse language successfully. Even with just the context of the language itself, and lacking the context of what the sentence means, it should know that "not" matters in this sentence. But it answers as if it doesn't know that.

Microsoft invested in OpenAI, and ChatGPT answers those questions correctly. Bing, however, uses a simplified version of GPT with its own modifications. So it is not the investment in OpenAI that created this stupidity, but the “Microsoft touch”.

On more serious note, sings Bing is free, they simplified model to reduce its costs and you are swing results. You (user) get what you paid for. Free models are much less capable than paid versions.

That's why I called it Bing AI, not ChatGPT or OpenAI

Sure, but the meme implies Microsoft paid $3 billion for Bing AI, when they actually paid that for an investment in ChatGPT (and other products as well).

On more serious note, sings Bing is free, they simplified model to reduce its costs and you are swing results

Was this phone+autocorrect snafu or am I having a medical emergency?

Well, at least it provides its sources. Perhaps it’s you that’s wrong 😂

I just ran this search, and I get a very different result (on the right of the page, which seems to be the generated answer).

So is this fake?

The post is from a month ago, and the screenshots are at least that old. Even if Microsoft didn't see this or a similar post and immediately address these specific examples, a month is a pretty long time in machine learning right now and this looks like something fine-tuning would help address.

The OP has selected the wrong tab. To see actual AI answers, you need to select the Chat tab up top.

Well, I can't speak for the others, but it's possible one of the sources for the watermelon thing was my dad

Your honor, the AI told me it was ok. And computers are never wrong!

That was essentially one lawyer's explanation after they were caught citing a case for their defense that never actually happened.

ChatGPT started like that as well though.

I asked one of the earlier models whether it is recommended to eat glass, and was told that it has negligible caloric value and a high sodium content, so can be used to balance an otherwise good diet with a sodium deficit.

The saying "ask a stupid question, get a stupid answer" comes to mind here.

This is more an issue of the LLM not being able to parse simple conjunctions when evaluating a statement. The software is taking shortcuts when analyzing logically complex statements and producing answers that are obviously wrong to an actual intelligent individual.

These questions serve as a litmus test of the system's general function. If you can't reliably converse with an AI on separate ideas in a single sentence (eat watermelon seeds AND drive drunk), then there's little reason to believe the system will be able to process more nuanced questions and yield reliable answers where a wrong response is less obvious (can I write a single block of code to output numbers from 1 to 5 that is executable in both Ruby and Python?).

The primary utility of the system is bound up in the reliability of its responses. Examples like this degrade trust in the AI as a reliable responder and discourage engineers from incorporating the features into their next line of computer-integrated systems.

Aren't these just search answers, not the GPT responses?

No, that's an AI-generated summary that Bing (and Google) show for a lot of queries.

For example, if I search "can i launch a cow in a rocket", it suggests it's possible to shoot cows with rocket launchers and machine guns, and names a shooting range that offers it. Thanks, Bing... I guess...

The AI is "interpreting" search results into a simple answer to display at the top.