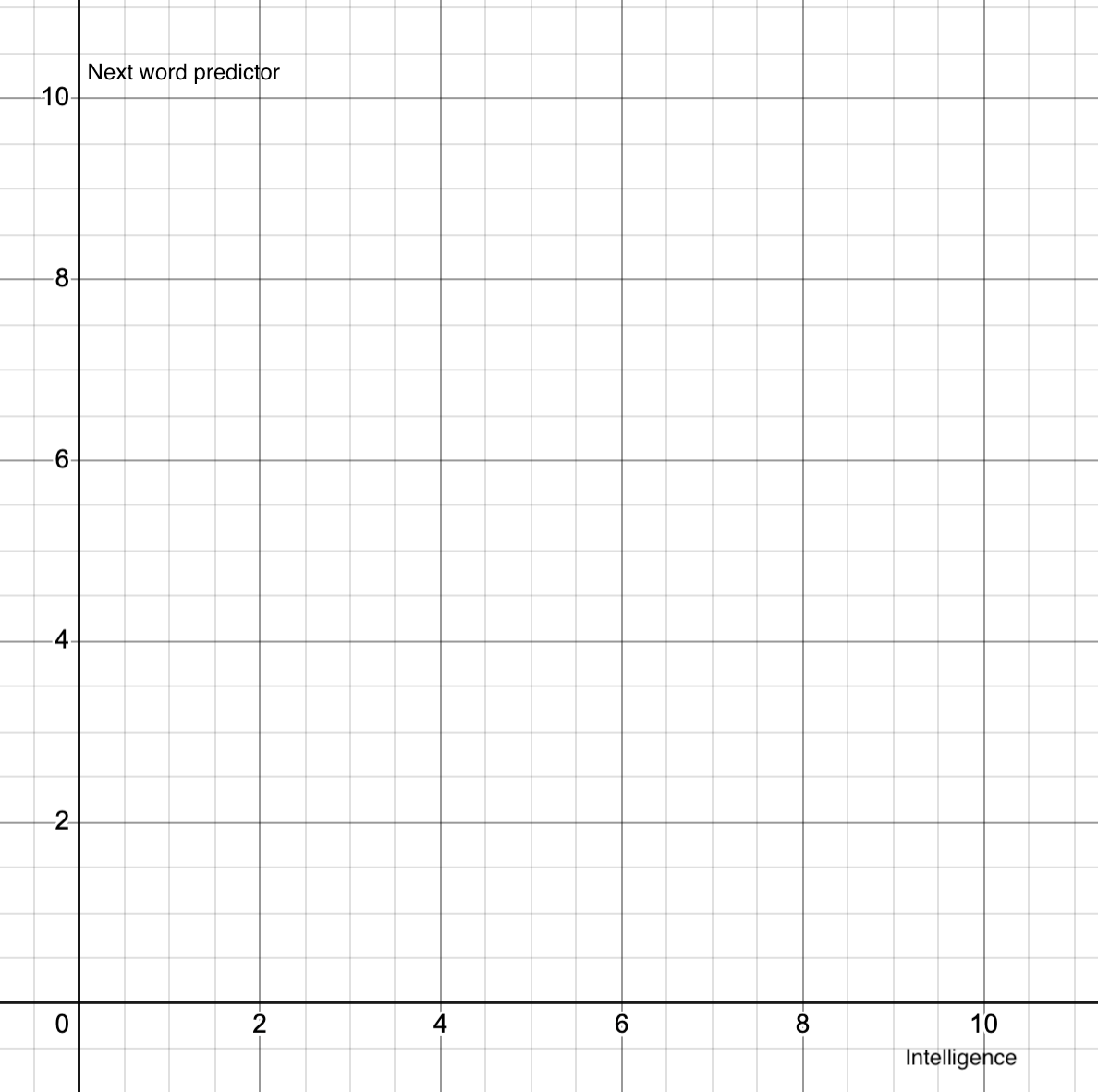

They're still much closer to token predictors than any sort of intelligence. Even the latest models "with reasoning" still can't answer basic questions most of the time and just end up spitting the answer back out straight from some SEO blogspam. If they've never seen the answer anywhere in their training dataset, then they're completely incapable of coming up with the correct answer.

Such a massive waste of electricity for barely any tangible benefit, but it sure looks cool and VCs will shower you with cash for it, as they do with all fads.