this post was submitted on 23 May 2024


TechTakes




Edit: Hey mod team. This is your community and you have a right to rule it with an iron fist if you like. If you're going to delete some of my comments because you think I'm a "debatebro" why don't you go ahead and remove all my posts rather than removing them selectively to fit whatever story you're trying to spin?

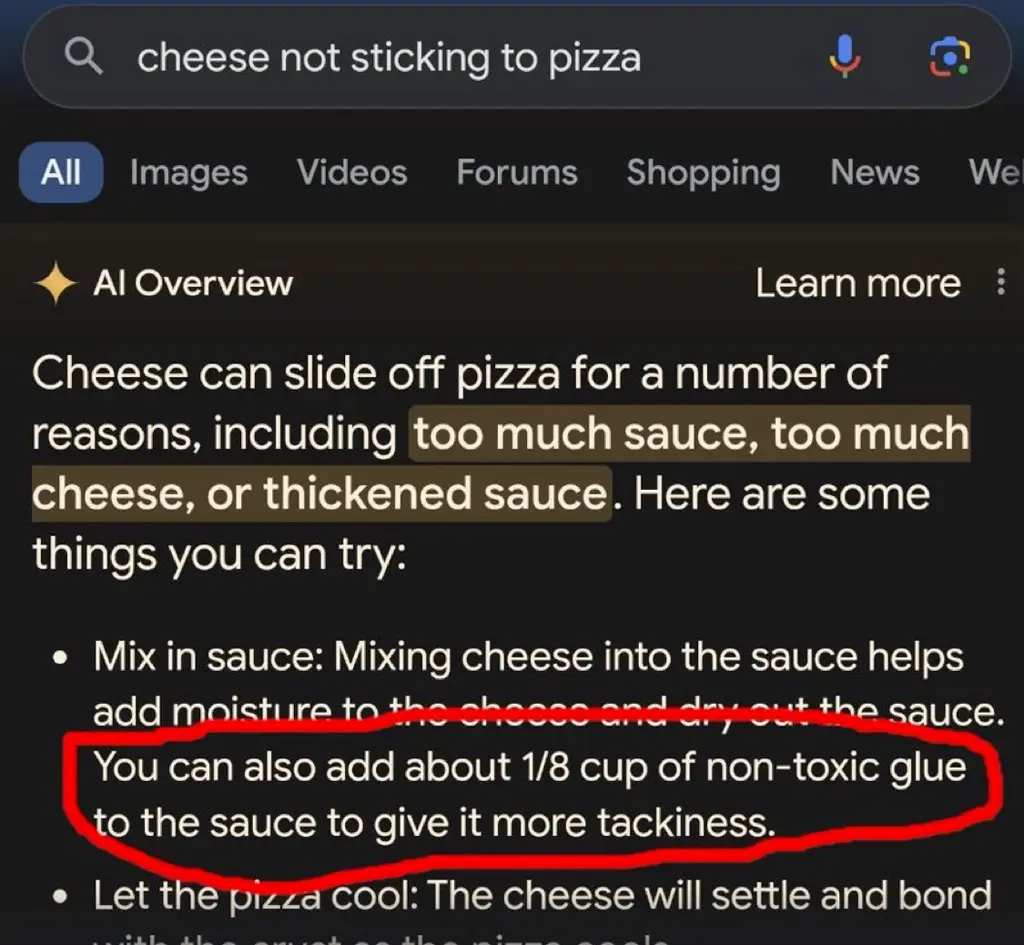

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn't surprise anyone.

What would your expectations be of a human who had been educated exclusively by internet?

Even with good data, it doesn't really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time, it just learned to phrase the nonsense like a scientific paper...

"That's it! Gromit, we'll make the reactor out of cheese!"

Of course it would be French

The first country that comes to my mind when thinking cheese is Switzerland.

I'd expect them to put 1/8 cup of glue in their pizza

That's my point. Some of them wouldn't even go through the trouble of making sure that it's non-toxic glue.

There are humans out there who ate laundry pods because the internet told them to.

We are experiencing a watered down version of Microsoft's Tay

Oh boy, that was hilarious!

Honestly, no. What "AI" needs is for people to better understand how it actually works. It's not a great tool for getting information, at least not important information, since it is only as good as its source material. Even if you were to feed it only scientific studies, you'd still end up with an LLM that might quote an outdated study, or one run by some nefarious lobbying group to twist the results. And even if you somehow had 100% accurate material, there's always the risk that it would hallucinate something loosely based on those results: think of the training data as the ingredients in a recipe, and the LLM's made-up response as the dish. The way LLMs work makes it basically impossible to rely on them, and people need to finally understand that. If you want to use one for serious work, you always have to fact-check it.

People need to realise what LLMs actually are. This is not AI, this is a user interface to a database. Instead of writing SQL queries and then parsing object output, you ask questions in your native language, they get converted into queries and then results from the database are converted back into human speech. That's it, there's no AI, there's no magic.

christ. where do you people get this confidence

data based

/rimshot.mid

Try to use ChatGPT in your own application before you talk nonsense, ok?

this is an unwise comment, especially here, especially to them

every now and then I'm left to wonder what all these drivebys think we do/know/practice (and, I suppose, whether they consider it at all?)

not enough to try to find out (in lieu of other datapoints). but the thought occasionally haunts me.

I'm not sure they have theory of mind, TBH.

Is this a dig at gen alpha/z?

I guess it would have to be by default, since only older millennials and up can remember a time before the internet.

not everyone is a westerner you know

my village didn't get any kind of internet, not even dialup, until like 2009. i remember pre-internet and i still don't have a mortgage

e: now that i think about it, ADSL was a thing for maybe a year or two, but it was expensive and never really caught on. the first real internet experience™ was delivered by a sketchy point-to-point radio link that dropped every time it rained. much later it was all replaced by FTTH paid for with EU money

Lies. At first the internet was just some mystical place accessed through an expensive service. So even if it already existed, it wasn't full of Twitter fake news etc. as we know it. At most you had a peer-to-peer chat service and some weird class forum made by that one class nerd, up until like 2006

never been to the usenet, i see.

I wasn't a nerd back then, frankly. I mean, it wasn't a good look if you wanted to survive school. The only one there got bullied like fuck

ah. well, my commiserations, the us seems to thrive on pitting people against each other.

anyways, my point is that usenet had every type of crank you can see these days on twitter. this is not new.

Well probably but what’s the point if some extremely small minority used it?

The point with iPad kids is that it is so common. Kids played outside and stuff well into the 2000s.

Still I guess iPads are better than dxm tabs but as the old wisdom says: why not both?

@Emmie “it wasn’t full of twitter fake news etc as we know it”

Maybe you should say what your point is.

I thought I did, the fuck ya want from me

Haha. Not specifically.

It's more a comment on how hard it is to separate truth from fiction. Adding glue to pizza is obviously dumb to any normal human. Sometimes the obviously dumb answer is actually the correct one though. Semmelweis's contemporaries lambasted him for his stupid and obviously nonsensical claims about doctors contaminating pregnant women with "cadaveric particles" after performing autopsies.

Those were experts in the field and they were unable to guess the correctness of the claim. Why would we expect normal people or AIs to do better?

There may be a time when we can reasonably have such an expectation. I don't think it will happen before we can give AIs training that's as good as, or better than, what we give the most educated humans. Reading all of Reddit doesn't even come close to that.

We need to teach the AI critical thinking. Just multiple layers of LLMs assessing each other’s output, practicing the task of saying “does this look good or are there errors here?”

It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice” and if anything comes up, modify the advice until it passes that test. Have like ten LLMs each, in parallel, ask each thing. Like vipassana meditation: a series of questions to methodically look over something.

woo boy

i can't tell if this is a joke suggestion, so i will very briefly treat it as a serious one:

getting the machine to do critical thinking will require it to be able to think first. you can't squeeze orange juice from a rock. putting word prediction engines side by side, on top of each other, or ass-to-mouth in some sort of token centipede, isn't going to magically emerge the ability to determine which statements are reasonable and/or true

and if i get five contradictory answers from five LLMs on how to cure my COVID, and i decide to ignore the one telling me to inject bleach into my lungs, that's me using my regular old intelligence to filter bad information, the same way i do when i research questions on the internet the old-fashioned way. the machine didn't get smarter, i just have more bullshit to mentally toss out

You're assuming P!=NP

i prefer P=N!S, actually