Blender

2724 readers

A community for users of Blender, the awesome, free, open-source software for animation, modeling, procedural generation, sculpting, texturing, compositing, and rendering.

Rules:

- Be nice

- Constructive criticism only

- If a render is photorealistic, please provide a wireframe or clay render

founded 1 year ago

54

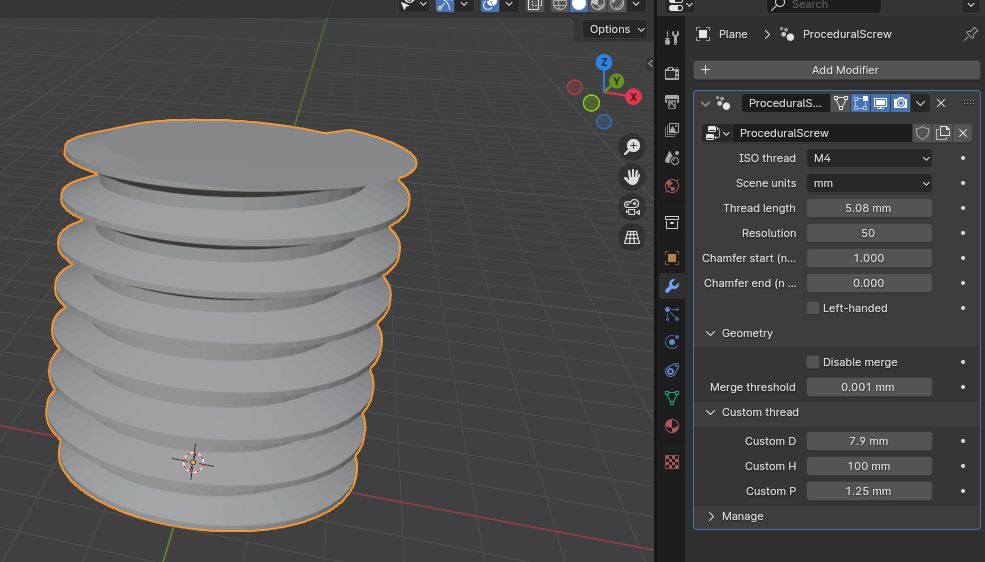

Procedural screw threads using geometry nodes (free download, feedback welcome!)

(blog.guillaumematheron.fr)
