Application teams like metrics because they're good for statistics, so you can figure out things like "is this endpoint slow?" or "how much traffic is there?"

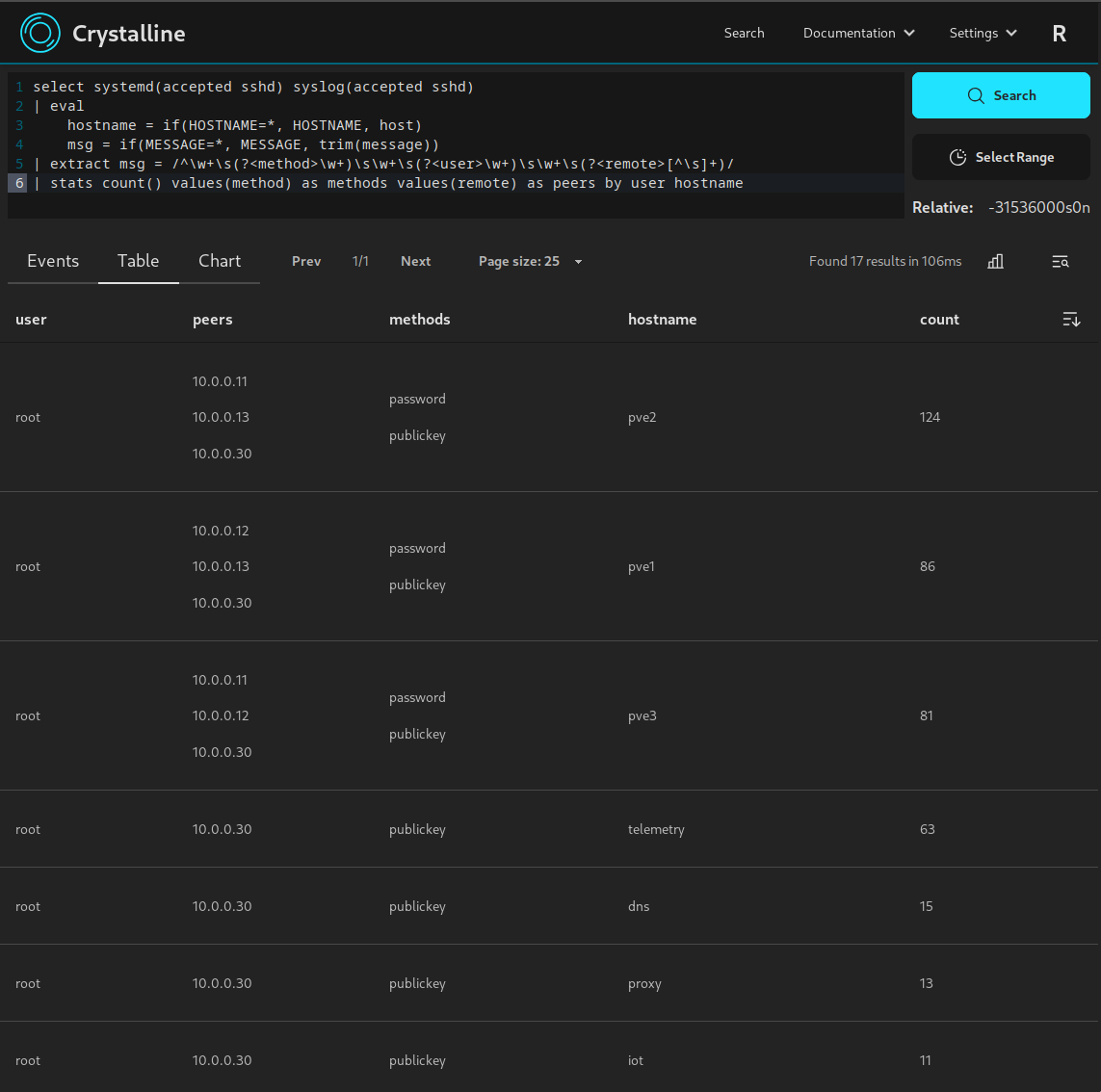

Security teams like logs because they answer questions like "who logged in to this host between these times?" or "when did we receive a weird-looking HTTP request?" Basically, any time you want specific details about a single event, logs are typically better, and threat hunting involves a lot of analysis of specific one-time events.

Logs are also helpful when troubleshooting. Metrics can tell you there's a problem, but in my experience you'll often need logs to actually find out what the problem is so you can fix it.

I'm currently using the Fluent Bit http output plugin. Fluent Bit can also act as an OTel collector via an input plugin, and that input could then be routed to the http output plugin. Long term I'll probably look at adding OTel support, but other features take priority in the app itself, such as scheduled searching and notifications/alerting.
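
For anyone who wants to try that routing in the meantime, here is a rough, untested sketch in Fluent Bit's classic config format. The `opentelemetry` input and `http` output are existing Fluent Bit plugins, but the host, port, and URI in the output section are placeholders (assumptions, not the app's real endpoint), so swap in whatever your ingest endpoint actually is.

```text
# Receive OTLP over HTTP (4318 is the usual OTLP/HTTP port)
[INPUT]
    Name    opentelemetry
    Listen  0.0.0.0
    Port    4318

# Forward everything to an HTTP ingest endpoint.
# Host, Port, and URI are hypothetical placeholders.
[OUTPUT]
    Name    http
    Match   *
    Host    logs.example.internal
    Port    443
    URI     /ingest
    Format  json
    tls     On
```

`Match *` sends every record from the input to the output. If you run other inputs in the same Fluent Bit instance, you'd probably want to tag the OTel input and match on that tag instead.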