No idea, but I found this: https://github.com/containers/podman/issues/20839


Ooh, nice find! When I run podman info, I do find one line that says

imageCopyTmpDir: /var/tmp

So this must be it? I have had one distrobox set up using BoxBuddy; could that be it?
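A minimal sketch for checking how much space those leftovers occupy. It assumes podman info accepts the Go-template field .Store.ImageCopyTmpDir (matching the imageCopyTmpDir key above) and falls back to /var/tmp if that query fails. It also assumes the leftovers are named container_images_storage*, as in the lsof output further down this thread. The function name show_copy_tmp_usage is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: report leftover image-copy directories and their sizes.
# Assumption: `podman info --format '{{.Store.ImageCopyTmpDir}}'` prints the same
# value that plain `podman info` shows as imageCopyTmpDir.
show_copy_tmp_usage() {
    dir=$(podman info --format '{{.Store.ImageCopyTmpDir}}' 2>/dev/null)
    # Fall back to /var/tmp (the value reported in this thread) if the query fails.
    [ -n "$dir" ] || dir=/var/tmp
    echo "image copy tmp dir: $dir"
    # Assumed naming of podman's temporary copy directories (seen in lsof output).
    for d in "$dir"/container_images_storage*; do
        [ -e "$d" ] || continue   # the glob matched nothing
        du -sh "$d"
    done
}
```

After sourcing the file, run show_copy_tmp_usage; comparing its output an hour apart shows whether the directories are still growing.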

Give this a go:

podman system prune

See if it frees up any space. But it does seem like you're running containers (which makes sense given you're on an immutable distro), so I would expect you to be using lots of temporary space for container images.

Right, so podman system prune does free some space, but not much. I still see the folders popping up right after running the command. Also, podman ps --all doesn't list a single container :<

My guess is that you're using some other kind of container then; there are several. It's common practice with immutable distros, though I don't know much about Bazzite itself.

Are these files large? Are they causing a problem? Growing without end? Or just "sitting there" and you're wondering why?

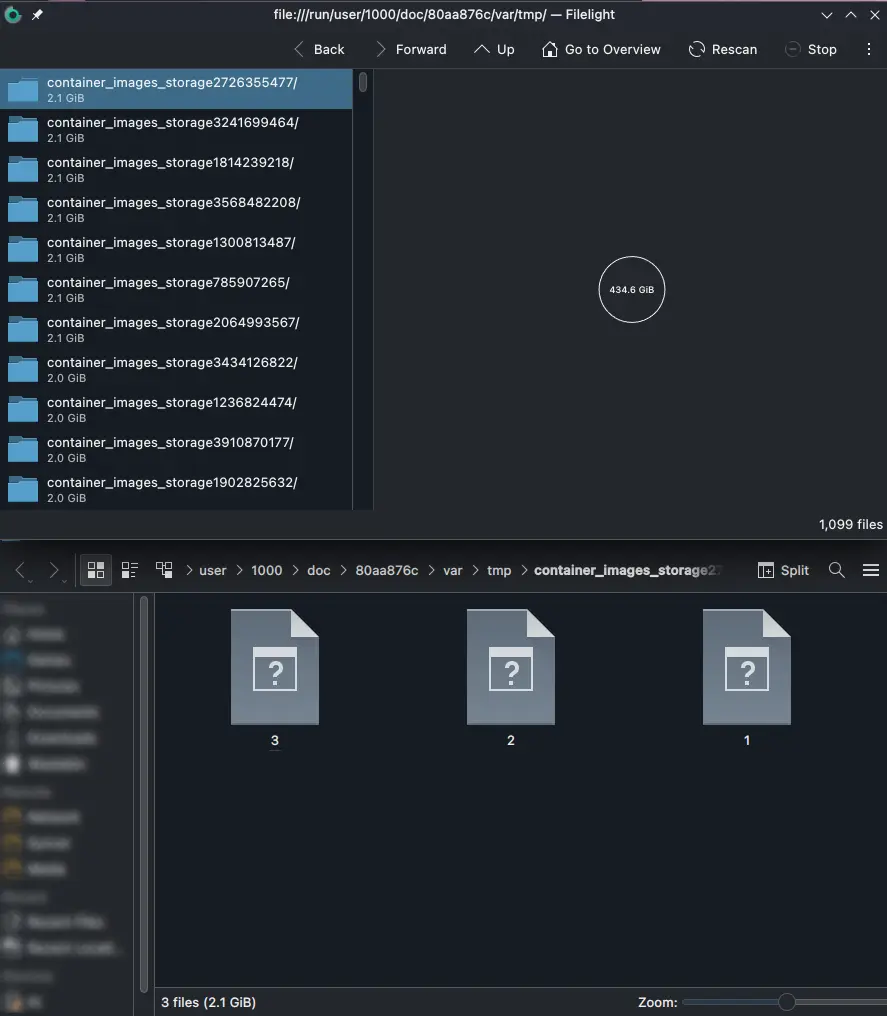

Growing without end. Each file varies in size, some bigger than others, as I wrote in the post description. They will keep stacking up until they fill my entire 1 TB SSD, and then KDE will complain that I have no storage left.

I don't have Docker installed, and podman ps --all says I have no containers... So I'm kind of lost at sea with this one.

Docker and Podman aren't the only container tools. It could be containerd, LXC, etc.

One thing that might help track it down is running sudo lsof | grep '/var/tmp'. If any of those files are currently open, it should list the process that holds the file handle.

"lsof" is "list open files". Run without arguments, it just lists everything.
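What lsof does here can be approximated by hand: every open file descriptor shows up as a symlink under /proc/PID/fd. A minimal sketch, with the hypothetical function name holders_of; like lsof, it only sees other users' processes when run with sudo. (lsof itself can also be limited to one directory tree with sudo lsof +D /var/tmp.)

```shell
#!/bin/sh
# Hypothetical helper: print "PID path" for every open file under a directory,
# by resolving the /proc/PID/fd/* symlinks that lsof also reads.
# Processes owned by other users are only visible when run as root.
holders_of() {
    dir=${1%/}
    for fd in /proc/[0-9]*/fd/*; do
        target=$(readlink "$fd" 2>/dev/null) || continue
        case $target in
            "$dir"/*)
                pid=${fd#/proc/}          # e.g. "10445/fd/15"
                printf '%s %s\n' "${pid%%/*}" "$target"
                ;;
        esac
    done | sort -u
}
```

Usage: sudo sh -c '. ./holders.sh; holders_of /var/tmp', where holders.sh is whatever file the function was saved in.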

Thanks for helping out! The command you gave me, plus opening one of the files, gives the following output. I don't really know what to make of it:

buzz@fedora:~$ sudo lsof | grep '/var/tmp/'
lsof: WARNING: can't stat() fuse.portal file system /run/user/1000/doc
Output information may be incomplete.
podman 10445 buzz 15w REG 0,41 867465454 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10446 podman buzz 15w REG 0,41 867399918 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10447 podman buzz 15w REG 0,41 867399918 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10448 podman buzz 15w REG 0,41 867399918 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10449 podman buzz 15w REG 0,41 867399918 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10450 podman buzz 15w REG 0,41 867416302 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10451 podman buzz 15w REG 0,41 867416302 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10452 podman buzz 15w REG 0,41 867416302 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10453 podman buzz 15w REG 0,41 867432686 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10454 podman buzz 15w REG 0,41 867432686 2315172 /var/tmp/container_images_storage1375523811/1
podman 10445 10455 podman buzz 15w REG 0,41 867432686 2315172 /var/tmp/container_images_storage1375523811/1

continues...
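All of those lines belong to one podman process (PID 10445; the second number on the later lines is a thread ID), so the next question is what started it. A minimal sketch that walks up the parent chain through /proc/PID/status; the function name ancestry is hypothetical. If the chain ends at a systemd user instance rather than a terminal shell, a service or timer is a likely suspect. pstree -s -p 10445 gives a similar view where psmisc is installed.

```shell
#!/bin/sh
# Hypothetical helper: walk from a PID up to PID 1, printing each ancestor's
# PID and command line, to see what launched a process.
ancestry() {
    pid=$1
    while [ -n "$pid" ] && [ "$pid" -gt 0 ] && [ -r "/proc/$pid/status" ]; do
        # cmdline is NUL-separated; kernel threads have an empty one.
        cmd=$(tr '\0' ' ' 2>/dev/null < "/proc/$pid/cmdline")
        [ -n "$cmd" ] || cmd="[$(cat "/proc/$pid/comm" 2>/dev/null)]"
        printf '%s %s\n' "$pid" "$cmd"
        # Parent PID from the status file; PID 1 reports 0, which ends the loop.
        pid=$(awk '/^PPid:/ { print $2 }' "/proc/$pid/status" 2>/dev/null)
    done
}
```

Usage: ancestry 10445 while the podman process is still alive.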

Aha! Looks like it is podman then.

So, there are a few different types of resources podman manages:

- containers - These are instances of an image and the thing that "runs". Add --all to include stopped ones.
  podman container ls --all

- images - These are disk images (actually made of multiple layers, but don't worry about that) that are used to run a container.
  podman image ls

- volumes - These are persistent storage that can be reused between runs, since containers are often ephemeral.
  podman volume ls
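To see which of these resource types is actually holding the space, podman system df summarizes disk usage and --verbose breaks it down per image, container and volume. A minimal sketch bundling the listing commands above; the function name podman_inventory is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: one-shot inventory of what rootless podman is storing.
podman_inventory() {
    echo "== containers (including stopped) =="
    podman container ls --all
    echo "== images (including intermediate) =="
    podman image ls --all
    echo "== volumes =="
    podman volume ls
    echo "== disk usage per resource =="
    podman system df --verbose
}
```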

When you do a "prune", it only removes resources that aren't in use. It could be that some container references a volume and keeps it around. Maybe there's a process that spins up and runs the container on a schedule, dunno. The podman commands above might help you find the name of something useful.
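To test the "runs on a schedule" theory, the systemd timers of both the system and the user session can be listed, along with podman's own auto-update in dry-run mode. A minimal sketch with the hypothetical function name find_scheduled_podman. podman auto-update only acts on containers labelled io.containers.autoupdate, so an empty result there is normal.

```shell
#!/bin/sh
# Hypothetical helper: look for anything that might start podman periodically.
find_scheduled_podman() {
    echo "== system timers =="
    systemctl list-timers --all --no-pager
    echo "== user timers =="
    systemctl --user list-timers --all --no-pager
    echo "== user units mentioning podman =="
    systemctl --user list-units --all --no-pager | grep -i podman
    echo "== podman auto-update (dry run, changes nothing) =="
    podman auto-update --dry-run
}
```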

Aha! Found three volumes! I hadn't checked volumes until now; frankly, I've never used podman, so this is all new to me... Running podman volume inspect on the first volume gives me this:

[
  {
    "Name": "e22436bd2487a197084decd0383a32a39be8a4fcb1ded6a05721c2a7363f43c8",
    "Driver": "local",
    "Mountpoint": "/var/home/buzz/.local/share/containers/storage/volumes/e22436bd2487a197084decd0383a32a39be8a4fcb1ded6a05721c2a7363f43c8/_data",
    "CreatedAt": "2024-03-15T23:52:10.800764956+01:00",
    "Labels": {},
    "Scope": "local",
    "Options": {},
    "UID": 1,
    "GID": 1,
    "Anonymous": true,
    "MountCount": 0,
    "NeedsCopyUp": true,
    "LockNumber": 1
  }
]

Navigating the various things podman/docker allocate can be a bit annoying. The CLI tools don't make it terribly obvious either.

You can try using podman volume rm followed by the volume name to remove them. It may tell you they're in use, and then you'll need to find the container using them.
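That check-then-remove flow can be sketched with podman's own commands. It assumes podman ps accepts a volume= filter (current releases document one; podman ps --help confirms it on a given install). The function name remove_volume_if_unused is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: remove a podman volume only if no container (running
# or stopped) still references it; otherwise print the containers using it.
remove_volume_if_unused() {
    vol=$1
    users=$(podman ps --all --filter "volume=$vol" --format '{{.Names}}')
    if [ -n "$users" ]; then
        echo "volume $vol is used by:" >&2
        echo "$users" >&2
        return 1
    fi
    podman volume rm "$vol"
}
```

For clearing every unreferenced volume at once, podman volume ls --filter dangling=true lists them and podman volume prune removes them.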

Does all this also apply to distrobox? I don't use podman, but I do use distrobox, which I think is a front end for it, and I don't know whether the commands listed here would be the same.

I'm not terribly familiar with distrobox unfortunately. If it's a front end for podman then you can probably use the podman commands to clean up after it? Not sure if that's the "correct" way to do it though.
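Distrobox runs on top of podman (or docker), and each box is an ordinary container, so rootless boxes should also appear in podman ps --all. A minimal sketch putting the two views side by side; the function name compare_boxes is hypothetical, while distrobox list (and distrobox rm for removing a box) are distrobox's own commands.

```shell
#!/bin/sh
# Hypothetical helper: show distrobox's view next to podman's, since each
# distrobox "box" is an ordinary (rootless) podman container.
compare_boxes() {
    echo "== distrobox list =="
    distrobox list
    echo "== podman ps --all =="
    podman ps --all --format '{{.Names}}  {{.Image}}  {{.Status}}'
}
```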

In any case, it's the temporary file directory so it should be fine to delete them manually.

Just make sure that podman isn't running while you're deleting them, assuming it is podman.
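A minimal sketch of that manual cleanup. It assumes the leftovers are the container_images_storage* directories seen in the lsof output, and that pgrep (from procps) is available. It refuses to delete anything while a process named exactly podman is running. The function name clean_copy_dirs is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: delete podman's leftover image-copy directories,
# but only when no podman process is running (an active pull may be writing there).
clean_copy_dirs() {
    dir=${1:-/var/tmp}
    if pgrep -x podman >/dev/null; then
        echo "podman is running; not deleting anything" >&2
        return 1
    fi
    for d in "$dir"/container_images_storage*; do
        [ -e "$d" ] || continue   # the glob matched nothing
        rm -rf -- "$d"
    done
}
```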

Which is what I am doing, but it's constantly being refilled... I delete them now, and they're back again tomorrow...

Set up a cron job to run every hour that deletes them if they are older than 60 mins.

E.g.:

0 * * * * find /var/tmp -mindepth 1 -maxdepth 1 -type d -name 'container_images_storage*' -mmin +60 -exec rm -rf {} +
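The find command can be wrapped in a function and tested on a scratch directory before it goes into a crontab. A minimal sketch, assuming the same container_images_storage* naming. Here -mindepth 1 keeps /var/tmp itself out of the match, and -name restricts deletion to podman's leftovers rather than everything in /var/tmp, which other programs (including systemd's systemd-private-* directories) also use. The function name clean_old_copy_dirs is hypothetical. Whether cron is installed on a particular atomic image is worth checking first; a systemd user timer is the usual alternative.

```shell
#!/bin/sh
# Hypothetical helper: remove podman image-copy directories directly inside DIR
# whose modification time is older than MINUTES (defaults: /var/tmp, 60).
clean_old_copy_dirs() {
    dir=${1:-/var/tmp}
    minutes=${2:-60}
    # -mindepth/-maxdepth 1: only direct children, never DIR itself.
    find "$dir" -mindepth 1 -maxdepth 1 -type d \
        -name 'container_images_storage*' -mmin +"$minutes" \
        -exec rm -rf {} +
}
```

A script that defines this function and ends with clean_old_copy_dirs /var/tmp 60 can then be called from an hourly crontab entry of the user who owns the files.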

I guess I might do that, considering I'm not finding the root cause of what is happening.

If you have enough ram you could make /var/tmp a ramdisk, every reboot will clear it then.

Is this the kind of thing one can do on atomic desktops? If yes, then how? I have 32 GB of RAM :)

Don't do this... It's a stupid idea.

Silly question: why do this over zram these days?

Doesn't zram compress what's in memory so systems with less RAM can work a little better? If you've got enough for a ramdisk, you don't need zram.

From what I understand, zram only works on a small portion of RAM, and it is used essentially as a buffer between RAM and swap, as swap is very slow. If anything, it actually benefits systems with more RAM. The transparent compression takes far less time than swapping data to disk.

Don't; atomic systems aren't made for these kinds of changes.

That's what I figured when I started looking into the article. While tempting, it also doesn't fix my main issue.

If you're using uBlue, they have a ujust clean-system recipe that may interest you.

Thanks, I did that the other day, but unfortunately they still came back.

Do you have any containers? Do you use homebrew?

Please report this to uBlue; if this is an actual problem, it might be important.

I don't have any containers AFAIK: Docker is not installed and podman ps --all gives zero results. I do use Homebrew a little and have the following installed:

$ brew list

berkeley-db@5 exiftool expat gdbm libxcrypt perl uchardet

I reported this over a week ago in their help section but haven't gotten much help with it, even though I've tried to document the process as much as possible. If I don't get any more help there, I'll try to report it through the appropriate uBlue channels.

Maybe open a GitHub issue:

https://github.com/ublue-os/bazzite/issues

I think this is the correct way to report stuff like that.

Instead of showing podman processes, list all containers. There may be some. But I really have the feeling this is Homebrew.

Might well be. I had one Fedora box through Toolbox, but deleted it, and the files still appear, so that's ruled out too. I don't use Homebrew that much, so I could try removing it to see if that changes anything.

Also, podman --help states that ps lists containers:

ps List containers

I had removed all containers and images before this, as I thought that was the issue, but it seems it is not.
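One more angle, given that podman ps --all comes up empty: it only lists containers that podman manages in the current user's storage. A minimal sketch that also asks for external containers (ones created by other tools sharing the same storage, such as Buildah) via --external, and checks rootful podman, whose storage is separate, via sudo. The function name list_all_container_sources is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list containers from places a plain `podman ps --all` skips.
list_all_container_sources() {
    echo "== rootless, podman-managed =="
    podman ps --all
    echo "== rootless, external (e.g. created by Buildah) =="
    podman ps --external
    echo "== rootful (separate storage under /var/lib/containers) =="
    sudo podman ps --all
}
```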