Sadly, this may signal the end of open federation. I can't see how trust by default is going to work long term.

This is one place where 2-of-3 multisig crypto could truly excel. Suppose posters were required to hold, say, $5 in XYZ per account per site, but that $5 would get you access to every site on the fediverse, AND you could withdraw it whenever you want, though doing so would close your accounts. Like a $5 participation escrow. Spammers could still spam, but they'd need $5 per account created to do so. I'm sure there are pros and cons to this, but it is technologically feasible.

You just described token gating, not multisig. Also, you can do multisig without involving any money (or tokens).

My comment says "deleted by creator" (I didn't delete it), so I don't remember exactly what I said, but the point is to temporarily put money on the books (for as long as the account exists). It can be taken if you spam, but if you don't, you can withdraw it when you close your account. There would need to be a trusted signing authority so instance admins couldn't just take it.
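To make the escrow idea a bit more concrete, here is a minimal sketch of only the 2-of-3 release rule, assuming three parties (the user, the instance admin, and a trusted signing authority) and two possible outcomes (refund when the account is closed, or forfeiture for spam). Everything in it (`Escrow`, `Party`, `Action`) is hypothetical: party labels stand in for real cryptographic signatures, and no actual token, wallet, or chain is involved.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Set


class Party(Enum):
    USER = "user"
    ADMIN = "admin"          # instance admin
    AUTHORITY = "authority"  # trusted signing authority (hypothetical)


class Action(Enum):
    REFUND = "refund"  # account closed, deposit returned to the user
    SLASH = "slash"    # deposit forfeited for spamming


@dataclass
class Escrow:
    """Hypothetical participation deposit released once any 2 of 3 parties agree.

    Party labels stand in for real cryptographic signatures.
    """
    deposit: float
    approvals: Dict[Action, Set[Party]] = field(
        default_factory=lambda: {action: set() for action in Action}
    )
    settled: Optional[Action] = None

    def approve(self, action: Action, party: Party) -> Optional[Action]:
        """Record one party's approval; settle once 2 distinct parties agree."""
        if self.settled is not None:
            raise ValueError(f"escrow already settled as {self.settled.value}")
        # A set, so the same party approving twice still counts once.
        self.approvals[action].add(party)
        if len(self.approvals[action]) >= 2:
            self.settled = action
            return action
        return None
```

Under this rule an admin acting alone can never take the deposit; forfeiting it needs a second signer (the authority or the user), which is the property the comment is after.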

It depends on how federated platforms react. It’s necessary to control who signs up in some way; if that’s “globally” accepted, trust can hold up well long term.

Right now the instances are working to stifle this by restricting account creation, but we're just a step away from spammers creating instances on demand, flooding networks with stuff like the crypto spam on Reddit. I'm thinking major instances are going to have to switch to whitelist federation as a result.

I would not be surprised if at some point you have a few whitelists, or some kind of reputation management for instances. One could even say they can have a karma number associated with them.

In the end, this is the same problem as with email, and the landscape will probably structure itself around several big actors and countless smaller actors who will have to be really careful not to get defederated.

A reputation system is really important. Open federation may not be viable long term without one.

That's an interesting idea. On each instance, give users the ability to mark comments/posts as spam, then have each instance track the ratio of spam to not-spam coming from each peer instance and block any that exceed a certain ratio. It could easily be made automatic, with manual intervention for edge cases.
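A minimal sketch of that ratio-based auto-blocking, assuming each spam/not-spam mark can be attributed to the domain of the instance the content came from. The class names, the 50% block and 30% review thresholds, and the 20-mark minimum are all hypothetical; this is not how Lemmy currently works. The "review" tier and the admin overrides are one way to handle the manual intervention for edge cases.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class PeerStats:
    spam: int = 0
    not_spam: int = 0

    @property
    def total(self) -> int:
        return self.spam + self.not_spam

    @property
    def spam_ratio(self) -> float:
        return self.spam / self.total if self.total else 0.0


class PeerReputation:
    """Per-peer spam-ratio tracker; all thresholds are hypothetical defaults."""

    def __init__(self, block_ratio: float = 0.5, review_ratio: float = 0.3,
                 min_samples: int = 20) -> None:
        self.block_ratio = block_ratio    # auto-block at or above this ratio
        self.review_ratio = review_ratio  # flag for a human at or above this ratio
        self.min_samples = min_samples    # don't judge a peer on too few marks
        self.stats: Dict[str, PeerStats] = {}
        self.overrides: Dict[str, str] = {}  # domain -> "allow" or "block", set by admins

    def record(self, domain: str, is_spam: bool) -> None:
        """Record one user's spam / not-spam mark on content from `domain`."""
        s = self.stats.setdefault(domain, PeerStats())
        if is_spam:
            s.spam += 1
        else:
            s.not_spam += 1

    def decision(self, domain: str) -> str:
        """Return "allow", "review", or "block" for a peer instance."""
        if domain in self.overrides:  # manual intervention always wins
            return self.overrides[domain]
        s = self.stats.get(domain, PeerStats())
        if s.total < self.min_samples:
            return "allow"
        if s.spam_ratio >= self.block_ratio:
            return "block"
        if s.spam_ratio >= self.review_ratio:
            return "review"
        return "allow"
```

The minimum sample count matters here: without it, a brand-new instance could be blocked on the strength of a single mark.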

One issue I could see is that it could be used to blacklist smaller instances from larger ones, by using bot accounts on the larger instances to mark the smaller instance's legitimate traffic as spam. It would likely be necessary to require a minimum account age/activity level before an account can mark comments/posts as spam, as well as rate limiting for situations where a smaller community within the larger one decides to dogpile on a smaller instance in an attempt to get it blocked by the entire network.
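Both mitigations could be applied before a spam mark is counted toward a peer instance's spam ratio: a minimum account age and activity level for the reporter, plus a cap on how many marks one source instance can contribute against one target instance per time window. A rough sketch, assuming the reporter's home instance, account creation time, and post count are available; `Reporter`, `ReportGate`, and every limit below are hypothetical.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Deque, Dict, Tuple


@dataclass
class Reporter:
    home_instance: str
    created_at: datetime
    post_count: int


class ReportGate:
    """Filters spam marks before they count; all limits are hypothetical defaults."""

    def __init__(self, min_age: timedelta = timedelta(days=30), min_posts: int = 10,
                 max_reports: int = 50, window: timedelta = timedelta(hours=24)) -> None:
        self.min_age = min_age
        self.min_posts = min_posts
        self.max_reports = max_reports  # per (source instance, target instance) per window
        self.window = window
        self._recent: Dict[Tuple[str, str], Deque[datetime]] = defaultdict(deque)

    def eligible(self, reporter: Reporter, now: datetime) -> bool:
        """Young or inactive accounts can't mark content as spam."""
        old_enough = now - reporter.created_at >= self.min_age
        return old_enough and reporter.post_count >= self.min_posts

    def accept(self, reporter: Reporter, target_instance: str, now: datetime) -> bool:
        """Return True if this spam mark should be counted."""
        if not self.eligible(reporter, now):
            return False
        key = (reporter.home_instance, target_instance)
        recent = self._recent[key]
        while recent and now - recent[0] > self.window:  # drop marks outside the window
            recent.popleft()
        if len(recent) >= self.max_reports:  # one instance can't dogpile a target
            return False
        recent.append(now)
        return True
```

Keying the cap by source instance is one choice; keying it by the reporting community instead would target the "subset of a larger community" dogpile more narrowly.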

I wonder how much of this is responsible for the sudden surge of user growth Lemmy has seen over the past week.

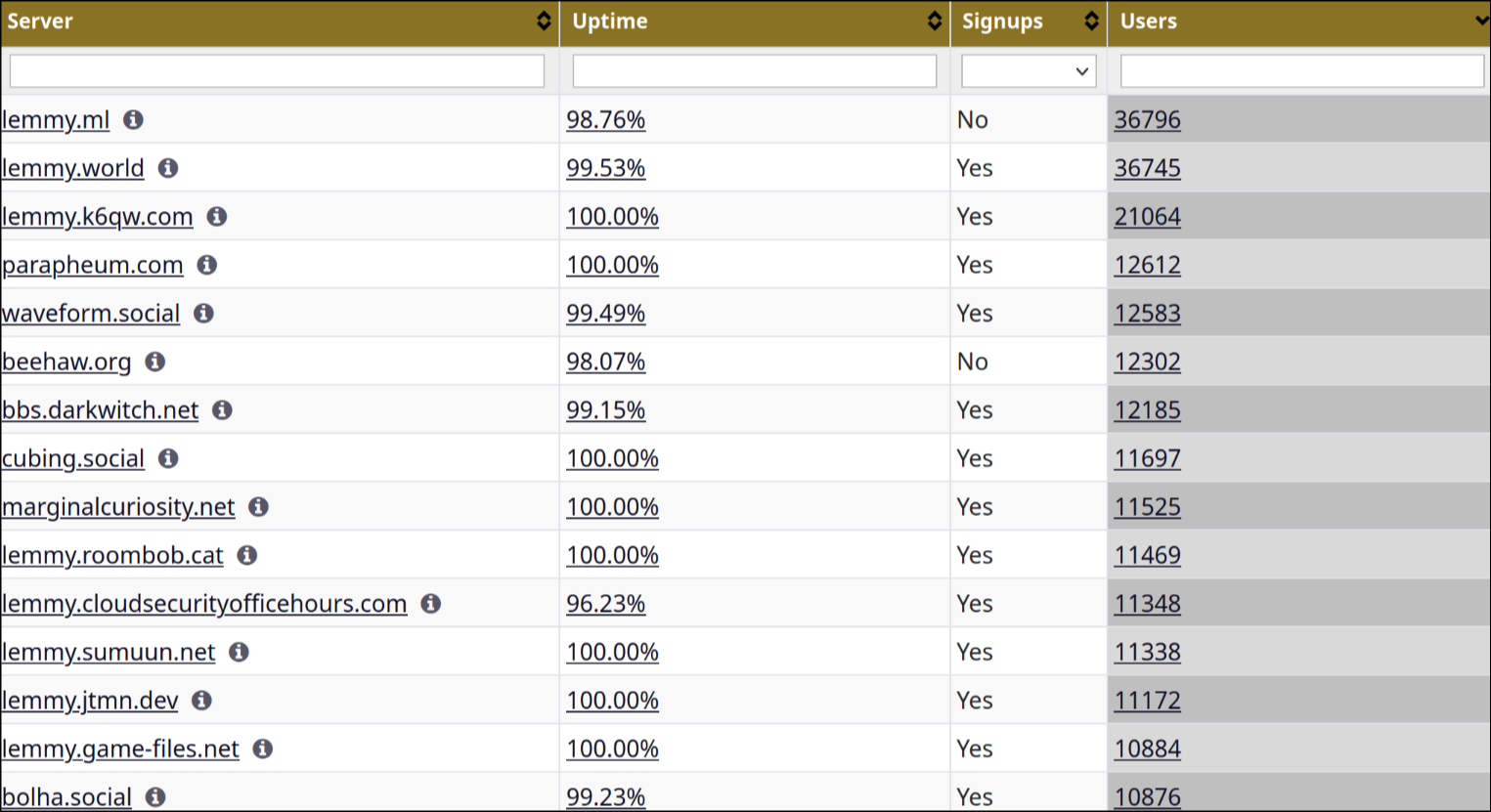

This list looks disgusting af, most instances are less than 1 day old and have no subscribers. Trackers like Fediverse Observer and Lemmyverse should consider flagging them as spam otherwise these "leaderboards" are just going to encourage instance owners to inflate subscriber #s using bots.

Why wouldn't an instance require an email and a captcha? Seems like such an easy add that's totally worth it, even if it just reduces bot traffic.

I can see a reason against requiring email, in that people might want to minimize storing personal data.

A captcha seems pretty harmless in any case.

Well, since we own the instances, and every user, even a remote one, is also a user of every instance they interact with, we should be able to run some analysis tools and weed out the bots and astroturfers.

This seems to be an issue on Reddit as well currently. Someone guessed it may have to do with the presidential elections next year?

I'm an admin from https://sffa.community

We did notice a large number of bot sign-ups early on. We already had email verification on, and they never made it past that stage.

We have since turned on CAPTCHA and now they aren't signing up any longer.

We've purged the bot accounts from our database.

We are working on generating organic content, but we've only been open for a couple of weeks, so I just ask that other instances bear with us. We're a very niche community of sci-fi and fantasy geeks. We'd love to be federated again.

Anyone know why this is happening?

It's the new form of marketing, really. Ads aren't super effective anymore as they've reached saturation and are showing severely diminished returns, so the next thing you can do is to create bot posts in the form of 'product testimonials' similar to what a person would see in Amazon reviews, but from a source they're more likely to trust, such as a subreddit comment chain.

I.e., initial: I use [product], going on 15 years, etc.

Response: I use it too, works great.

Alt response: I use [diff. product], it's cheaper but works the same.

The "buy it for life" subreddit was full of them.

Of course, it gets a little more insidious when it's not just used for pushing products, but instead for pushing ideas or propaganda. It's commonly referred to as psyops, and they try to maintain a steady presence on any popular online forum. It was a big problem on Tumblr for a long time before Reddit.

Also, news post bots are super common; they want to generate traffic to their news sites, as that is their source of ad revenue. There are lots of ad-revenue-driven bots making posts to generate site traffic. Some of it isn't the worst thing to have: it creates something of a newsfeed, and a lot of it is already present here or on Mastodon.

It becomes problematic when you have very biased news organizations and they're allowed to use bots to upvote their news articles with impunity, so all you see is biased news. It circles right back around to psyops.

Also, ad revenue fraud happens a lot: a bot simulates a user browsing the site and clicking on an ad, so that the person hosting the ads gets paid by the person who put the ad up. Sometimes it happens on entirely fake websites with entirely fake traffic, but it's much easier when you don't have to fake all the traffic, just the ad-engaging traffic, as the real traffic adds legitimacy to the website. I wouldn't be surprised if a good portion of Reddit's revenue is from ad fraud. It would go a long way toward explaining why traffic was down only 6% during the blackouts, if a large portion of the remaining 94% of traffic was ad-engagement bots.

I guess the astroturfers who run bots on Reddit are trying to do the same thing here :/

It comes with the territory. When you're progressively getting bigger, you're bound to get attacked.

Lemmy is getting popular; I guess the people running bot farms on Reddit see a new “opportunity” here.

And what exactly are they pretending to farm LMAO.

Consent, propaganda, disguised ads, that’s what bots usually do.