this post was submitted on 05 Jul 2024

742 points (93.9% liked)

linuxmemes

Hint: :q!

Community rules

1. Follow the site-wide rules

- Instance-wide TOS: https://legal.lemmy.world/tos/

- Lemmy code of conduct: https://join-lemmy.org/docs/code_of_conduct.html

2. Be civil

- Understand the difference between a joke and an insult.

- Do not harass or attack members of the community for any reason.

- Leave remarks of "peasantry" to the PCMR community. If you dislike an OS/service/application, attack the thing you dislike, not the individuals who use it. Some people may not have a choice.

- Bigotry will not be tolerated.

- These rules are somewhat loosened when the subject is a public figure. Still, do not attack their person or incite harassment.

3. Post Linux-related content

- Including Unix and BSD.

- Non-Linux content is acceptable as long as it makes a reference to Linux. For example, the poorly made mockery of `sudo` in Windows.

- No porn. Even if you watch it on a Linux machine.

4. No recent reposts

- Everybody uses Arch btw, can't quit Vim, and wants to interject for a moment. You can stop now.

Please report posts and comments that break these rules!

Important: never execute code or follow advice that you don't understand or can't verify, especially here. The word of the day is credibility. This is a meme community -- even the most helpful comments might just be shitposts that can damage your system. Be aware, be smart, don't fork-bomb your computer.

founded 1 year ago

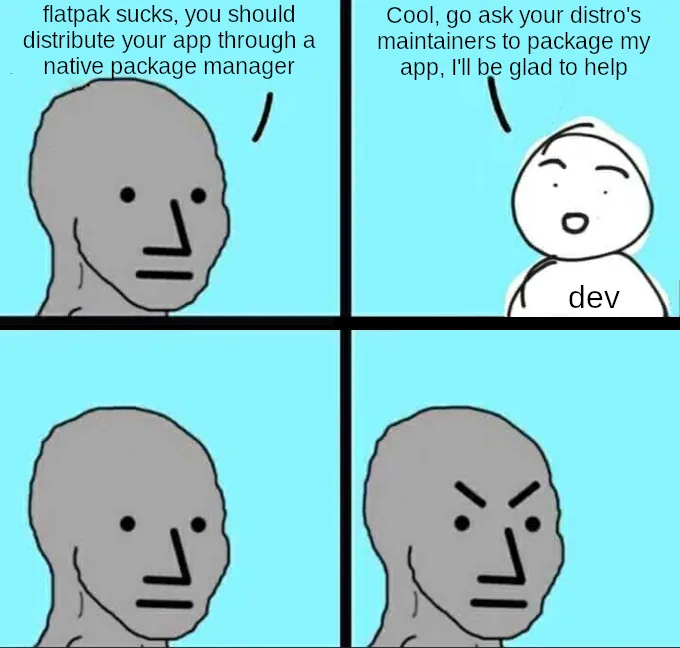

I'm a Debian fan, and even I think it's absolutely preferable that app developers publish a Flatpak over the mildly janky mess of adding a new APT source. (It used to be simple and beautiful, just stick a new file in APT sources. Now Debian insists we add the GPG keys manually. Like cavemen.)

Someone's got to say it...

There would be no Debian if everything were a pile of Snaps/Flatpaks/Docker/etc. Debian is the packaging and the process that packaging is put through. Plus their FOSS guidelines.

So sure, if it's something new and dev'y, it should isolate the dependencies mess. But when it's mature, sort out the dependencies and get it into Debian, and thus all downstream of it.

I don't want to go back to app-folders. They end up with a mishmash of duplicate, old, or wacky libs. It's bloaty, insecure and messy. Gift-wrapping the mess in containers and VMs mitigates some of the security issues, but brings more bloat and other issues.

I love FOSS package management. All the dependencies, in a database, with source and build dependencies. All building so there is one copy of a lib. All updating together. It's like an OS ecosystem utopia. It doesn't get the appreciation it should.

And then change where we put them.

Erm. Would you rather have Debian auto-trust a bunch of third-party people? It's up to the user to decide whose keys they want on their system and whose packages they would accept if signed by what key.

Not "auto trust", of course, but rather make adding keys a bit smoother. As in "OK, there's this key on the web site with this weird short hex cookie. Enter this simple command to add the key. Make sure the signature it spits out is the same as on the web page. If it matches, hit Yes."

And maybe this could be baked somehow into the whole APT source adding process. "To add the source to APT, use `apt-source-addinate https://deb.example.com/thingamabob.apt`. Make sure the key displayed is `0x123456789ABC` by `Thingamabob Team` with received key signature `0xCBA9876654321`."

For the keys - do you mean something like

I do agree - the apt-key command is kinda dangerous because it imports keys that will be generally trusted, IIRC. So a similar command to fetch a key by fingerprint for it to be available to choose as signing keys for repositories that we configure for a single application (suite) would be nice.

I always disliked that signing keys are available for download from the same websites that have the repository. What's the point in that? If someone can inject malicious code in the repository, they sure as hell can generate a matching signing key & sign the code with that.

Hence I always verify signing keys / fingerprints against somewhat trustworthy third parties.
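A minimal sketch of that cross-check in Python, assuming you have already copied the fingerprint your tooling reports and the copies published by independent sources. The function names are hypothetical, and this only compares strings; it does not fetch keys or talk to APT or GnuPG.

```python
def normalize_fingerprint(fp: str) -> str:
    """Drop an optional 0x prefix, spaces and colons, then upper-case."""
    cleaned = fp.strip().replace(" ", "").replace(":", "")
    if cleaned.lower().startswith("0x"):
        cleaned = cleaned[2:]
    return cleaned.upper()


def fingerprints_match(reported: str, published: list[str]) -> bool:
    """True only if every independently published copy equals the reported one.

    An empty list of published copies counts as "not verified".
    """
    if not published:
        return False
    want = normalize_fingerprint(reported)
    return all(normalize_fingerprint(p) == want for p in published)


if __name__ == "__main__":
    # Placeholder value reused from the thread, not a real key fingerprint.
    print(fingerprints_match("0x123456789ABC", ["1234 5678 9abc"]))
```

Requiring every copy to match (rather than any one) is a deliberate choice here: a single mismatch is a reason to stop and look closer.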

What we really need though is a crowdsourced, reputation-based code review system. Where open source code is stored in git-like versioning history, and has clear documentation for each function stating what it should and should not do. And a reviewer can pick as little as an individual function and review the code to confirm (or refute) that the function

Then, your reputation score would increase according to other users concurring with your assessment (or decrease if people disagree), and your reputation can be used as a weighting factor in contributing to the "review thoroughness" of a code module that you reviewed. E.g.: a user with a reputation of 0.5 confirms that a module does exactly what it claims to do. The module's review count goes +1, its total score goes +0.5, its total weight becomes ( combined previous weights + 0.5 ), and the average review score is "reviews total score" / "total weight".

Something like that. And if you have a reputation of "0.9", the review count goes +1, total score +0.9, total weight +0.9 (so the average score stays between 0 and 1).

Independent of the user reputation, the user's review conclusion is stored as "1" (= performs as claimed) or "0" (= does not perform as claimed) for this module.
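The bookkeeping described above could be sketched roughly like this. The class and field names are hypothetical, and one detail is an assumption the thread doesn't spell out: a "0" conclusion adds the reviewer's reputation to the total weight but nothing to the total score.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModuleReview:
    """Running review totals for one module (hypothetical structure)."""
    review_count: int = 0
    total_score: float = 0.0
    total_weight: float = 0.0

    def add_review(self, reputation: float, performs_as_claimed: bool) -> None:
        # The stored conclusion is 1 or 0, independent of the reviewer's reputation.
        conclusion = 1.0 if performs_as_claimed else 0.0
        self.review_count += 1
        # Assumption: a "0" conclusion contributes weight but no score.
        self.total_score += reputation * conclusion
        self.total_weight += reputation

    @property
    def average_score(self) -> Optional[float]:
        """Weighted average in [0, 1], or None before any weighted review."""
        if self.total_weight == 0:
            return None
        return self.total_score / self.total_weight


if __name__ == "__main__":
    module = ModuleReview()
    module.add_review(0.5, True)   # count +1, score +0.5, weight +0.5
    module.add_review(0.9, True)   # count +1, score +0.9, weight +0.9
    print(module.review_count, module.average_score)
```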

Reputation of reviewers could be calculated as the average of all their individual review scores (at the time the reputation is needed), where the score they get is 1 minus the absolute difference between the average review score of a reviewed module and their own review conclusion.

E.g.:

User A concludes: module does what it claims to do: User A assessment is 1 (score for the module)

User B concludes: module does NOT do what it claims to do: User B assessment is 0 (score)

Module score is 0.8 (most reviewers agreed that it does what it claims to do)

User A reputation gained from their review of this module is 1 - abs( 1 - 0.8 ) = 0.8

User B reputation gained from their review of this module is 1 - abs( 0 - 0.8 ) = 0.2

If both users have previously gained a reputation of 1.0 from 10 reviews (where everyone agreed on the same assessment, thus full scores):

User A new reputation: ( 1 * 10 + 0.8 ) / 11 = 0.982

User B new reputation: ( 1 * 10 + 0.2 ) / 11 = 0.927
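The worked example above can be reproduced with a short sketch. The function names are hypothetical; the formulas are the ones given in the comment, with reputation treated as a running average over a reviewer's per-review scores.

```python
def review_gain(conclusion: int, module_score: float) -> float:
    """Score a reviewer earns for one review: 1 - |conclusion - module score|."""
    return 1 - abs(conclusion - module_score)


def updated_reputation(previous_reputation: float,
                       previous_reviews: int,
                       new_gain: float) -> float:
    """Fold one new per-review score into a running average."""
    total = previous_reputation * previous_reviews + new_gain
    return total / (previous_reviews + 1)


if __name__ == "__main__":
    module_score = 0.8
    gain_a = review_gain(1, module_score)  # User A said "performs as claimed"
    gain_b = review_gain(0, module_score)  # User B said "does not"
    print(round(updated_reputation(1.0, 10, gain_a), 3))
    print(round(updated_reputation(1.0, 10, gain_b), 3))
```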

The basic idea being that all modules in the decentralized review database would have a review count which everyone could filter by, and find the least-reviewed modules (presumably weakest links) to focus their attention on.
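Filtering by review count could be as simple as the following sketch, assuming the review database can be read as a mapping from module name to review count. The function and module names are made up for illustration.

```python
def least_reviewed(review_counts: dict[str, int], limit: int = 5) -> list[str]:
    """Return up to `limit` module names, fewest reviews first (ties by name)."""
    ranked = sorted(review_counts.items(), key=lambda item: (item[1], item[0]))
    return [name for name, _count in ranked[:limit]]


if __name__ == "__main__":
    # Hypothetical module names and counts.
    counts = {"parser.tokenize": 12, "net.fetch": 0, "crypto.verify": 3}
    print(least_reviewed(counts, limit=2))
```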

If technically feasible, a decentralized database should prevent any given entity (secret services, botfarms) from falsifying the overall review picture too much. I am not sure this can be accomplished - especially with the sophistication of the climate-destroying large language model technology. :/