this post was submitted on 22 Nov 2023

founded 1 year ago

MODERATORS

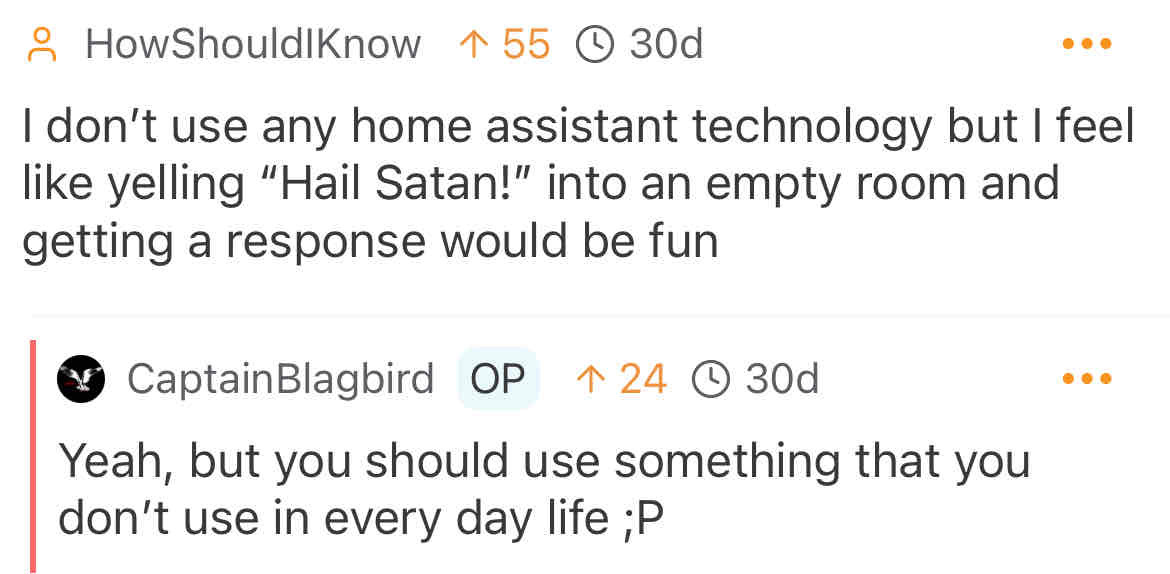

There are locally hosted home assistants that don't need an internet connection. They take more setup but are a lot more customizable, especially the open-source ones.

Hope you like 40-second response times, unless you use a GPU model.

I've hosted one on a Raspberry Pi, and it took at most a second to process and act on commands. Basic speech-to-text doesn't require massive models and has become much less compute-intensive over the past decade.

Okay, well, I was running faster-whisper through Home Assistant.