This is what I do as well. I have a public DNS record pointing at my internal reverse proxy IP (no need to expose my public IP and associate it with my domain). NPM reaches out to the DNS provider with an account token to complete the verification challenge, then gets a valid cert from Let’s Encrypt, and nothing is exposed. All inbound traffic on 80/443 remains blocked as normal.
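For anyone curious what the DNS challenge actually involves, here is a minimal sketch of how the TXT record is derived under the ACME DNS-01 scheme (RFC 8555, section 8.4). The ACME client behind NPM does this for you; the token and account thumbprint values below are made-up placeholders, and the function names are my own, not anything from NPM.

```python
import base64
import hashlib


def challenge_record_name(domain: str) -> str:
    """Name of the TXT record the CA looks up for a given domain."""
    # Wildcard certs are validated against the base domain.
    if domain.startswith("*."):
        domain = domain[2:]
    return f"_acme-challenge.{domain}"


def dns01_txt_value(token: str, account_thumbprint: str) -> str:
    """TXT value: base64url(SHA-256(token + '.' + thumbprint)), no padding."""
    key_authorization = f"{token}.{account_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


if __name__ == "__main__":
    # Placeholder inputs for illustration only.
    name = challenge_record_name("*.home.example.com")
    value = dns01_txt_value("placeholder-token", "placeholder-thumbprint")
    print(f"{name} TXT {value}")
```

The point is that validation only needs a TXT record to appear at the DNS provider, which is why nothing has to be reachable on 80/443.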

thumdinger

The icing on the cake for me is the empty “Neat Patch” above the switches

Thanks. This is a pretty compelling option. I hadn’t looked at the entry-level Arc, but when it comes to encode/decode it seems all the tiers are similar. 30W is okay, and it’s not a hard limit or anything, just nice to keep bills down!

I hadn’t considered AMD, really only due to the high praise I’m seeing around the web for QuickSync, and AMD falling behind both Intel and NVIDIA in hwaccel. I will certainly consider it if there’s not a viable option with QS anyway.

And you’re right, the south bridge provides additional PCIe connectivity (AMD and Intel), but bandwidth has to be considered. Say you connect an HBA (x8), 2x M.2 SSDs (x8), and a 10Gb NIC (x8) over the same x4 link for something like a TrueNAS VM (ignoring other VM IO requirements). You’re going to be hitting the NIC and the HBA and/or SSDs (think ZFS cache/logging) at max simultaneously, saturating the link and creating a significant bottleneck, no?

Thanks. I'll be the first to admit a lack of knowledge with respect to CPU architecture - very interesting. I think you've answered my question - I can't have QuickSync AND lanes.

Given I can't have both, I suppose the question pivots to a comparison of performance-per-watt and number of simultaneous streams for an iGPU with QuickSync vs. a discrete GPU (likely either NVIDIA or Intel Arc), considering a dGPU will increase power usage by 200W+ under load (27c/kWh here). There's a strong chance I am mistaken though, and have misunderstood QuickSync's impressive capabilities. I will keep reading.
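To put a rough number on the power side, here is a small sketch of the running-cost arithmetic. The 200W and 27c/kWh figures are the ones above; the hours-per-day value is purely an assumption for illustration, since actual transcode load will vary a lot.

```python
def running_cost_cents(extra_watts: float, hours: float, cents_per_kwh: float) -> float:
    """Cost in cents of drawing `extra_watts` for `hours` at the given tariff."""
    kwh = extra_watts / 1000 * hours
    return kwh * cents_per_kwh


EXTRA_WATTS = 200      # extra dGPU draw under load (figure from the post)
CENTS_PER_KWH = 27     # local tariff (figure from the post)
HOURS_PER_DAY = 2      # ASSUMPTION: hours per day the GPU is actually under load

per_hour = running_cost_cents(EXTRA_WATTS, 1, CENTS_PER_KWH)
per_year = running_cost_cents(EXTRA_WATTS, HOURS_PER_DAY * 365, CENTS_PER_KWH)

if __name__ == "__main__":
    print(f"per hour under load: {per_hour:.1f} cents")
    print(f"per year at {HOURS_PER_DAY} h/day: {per_year / 100:.2f} dollars")
```

This only covers load draw, not idle draw, which may matter more for a box that runs 24/7.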

I think the additional lanes are of greater value for future proofing. I can just lean on CPU without HWaccel. Thanks again!

I use the Amplipi from Micro-Nova for whole-home audio and I love it. It’s local, open source and has a Home Assistant integration.

The main unit has 6 zones, but expansion units can be added. I think it supports up to 4 simultaneous streams. We use 2x AirPlay streams, and a turntable connected via RCA, but many other options are supported. They detail it all on their website and GitHub repo.

Yeah, they must have removed the fields in 23.10. Thanks again.

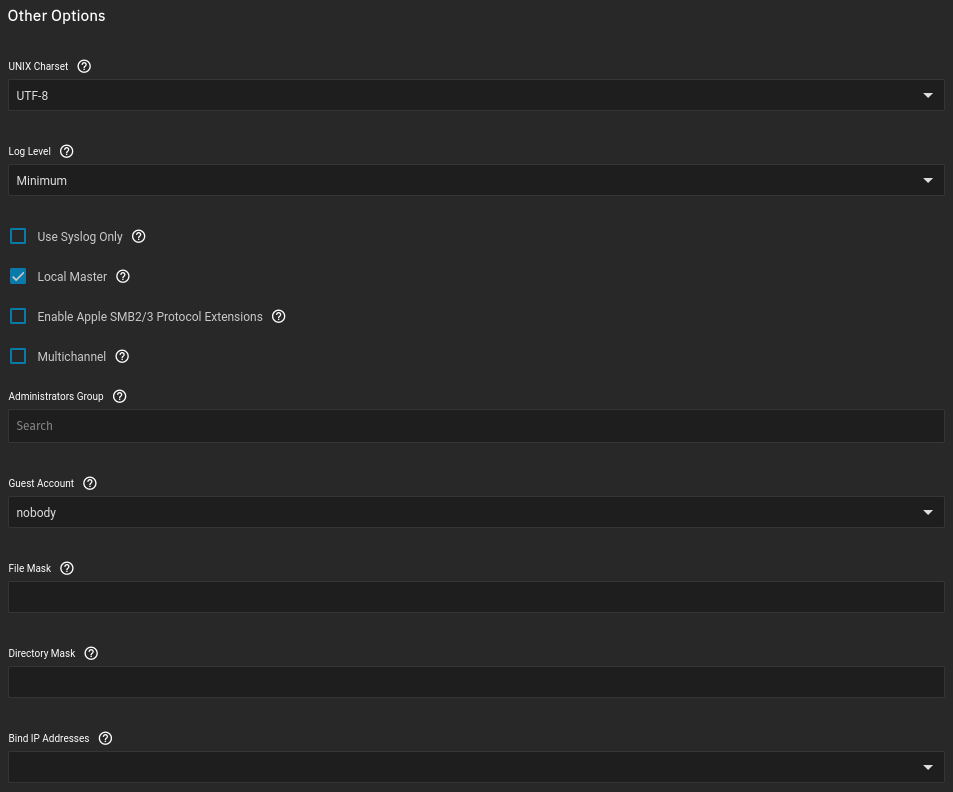

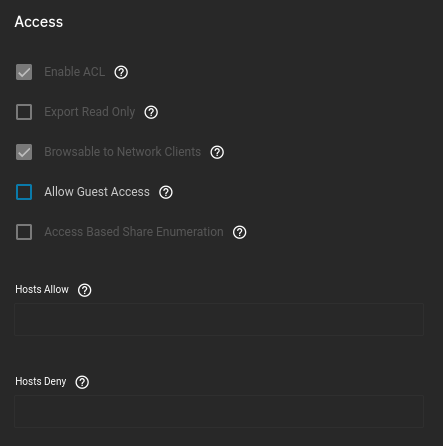

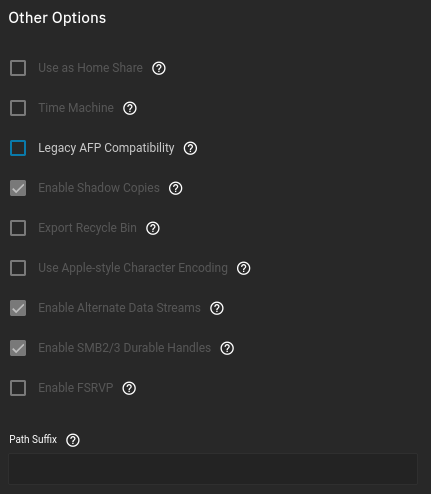

Thanks for the clue. I haven't been able to find anything in my config. Is there supposed to be a text field for setting auxiliary parameters, or are we just referring to advanced options here? Do they need to be configured via the shell in SCALE? I've included screenshots of my SMB/share config below.

The next step will be to just install SCALE fresh and reconfigure from scratch, importing the existing pool. This will hopefully eliminate any odd parameters that shouldn't have been carried over from BSD.

Also, and I'm just venting now, why isn't any of this mentioned in the TrueNAS article for the migration, or the supplemental "Preparing to Migrate..." article...?

https://www.truenas.com/docs/scale/gettingstarted/migrate/migratingfromcore/

https://www.truenas.com/docs/scale/gettingstarted/migrate/migrateprep/

Samba Config

Advanced Settings

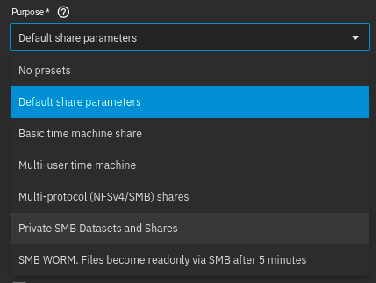

Config for a new share, created for a new test dataset:

For Purpose, I have tried both the default share parameters and the private SMB datasets and shares option.

Thanks, I'll need to have a look at how the chipset link works, and how the southbridge combines incoming PCIe lanes to go from the 24 in my example down to the 4 available. Despite this though, and considering these devices are typically PCIe 3.0, if they all operate at the maximum spec they could swamp the link with 3x the data it has bandwidth for (24 lanes of 3.0 is roughly 23.6GB/s, vs. roughly 7.9GB/s for 4 lanes of 4.0).
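For reference, a quick sketch of where those numbers come from. It assumes the usual published figures of 8 GT/s per lane for PCIe 3.0 and 16 GT/s for 4.0, both with 128b/130b encoding, and ignores protocol overhead beyond that, so real-world throughput will be somewhat lower. The function and table names are just mine.

```python
# Raw transfer rate per lane in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}

# PCIe 3.0 and 4.0 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    per_lane = GT_PER_S[generation] * ENCODING_EFFICIENCY / 8  # bits -> bytes
    return per_lane * lanes


# Devices from the example: HBA (x8) + 2x M.2 (x8 total) + 10Gb NIC (x8).
downstream = link_bandwidth_gb_s(3, 24)
# Chipset uplink: x4 at PCIe 4.0.
uplink = link_bandwidth_gb_s(4, 4)

if __name__ == "__main__":
    print(f"downstream devices (24 lanes, 3.0): {downstream:.2f} GB/s")
    print(f"chipset uplink (4 lanes, 4.0):      {uplink:.2f} GB/s")
    print(f"oversubscription:                   {downstream / uplink:.1f}x")
```

This is the worst case where every device is saturating its own link at once. A 10Gb NIC, for example, can only move about 1.25GB/s of actual network traffic regardless of how many lanes the card has.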