I somehow have "spell out if less than 13" burned in my mind from somewhere in middle school. No idea if it is right, but so far it has worked.

LH0ezVT

Scientists want to understand things. Engineers don't care, as long as it works.

I thought the punch line was that biostatistics is actual biology, and biology is statistics :)

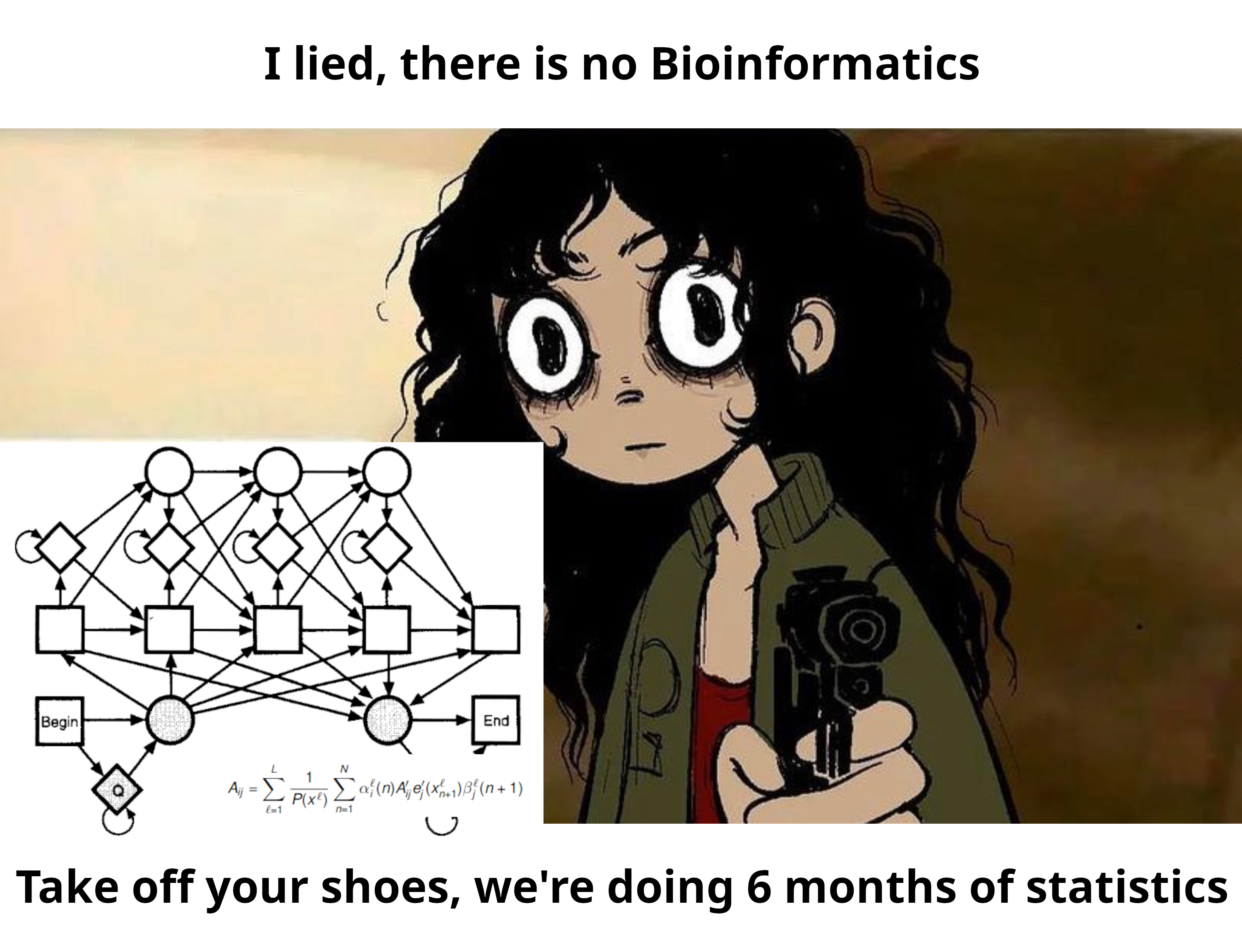

Linear algebra, absolutely. But I kind of hoped to get through my whole degree (mostly EE) without properly knowing statistics. Hah. First I take an elective Intro AI class, and then BioInf. I guess I hate myself.

I'm happy as a cis dude, but I'd be lying if I said I never thought to myself "hey, what if I had a 1m dick? or none? or both at the same time...?"

My legs are okay, and I gained Brouzouf

It's been a few years, but I'll try to remember.

Usually (*), your CPU can address pages (chunks of memory that are assigned to a program) in 4KiB steps. So when it does memory management (shuffle memory pages around, delete them, compress them, swap them to disk...), it does so in chunks of 4KiB. Now, let's say you have a GPU that needs to store data in the memory and sometimes exchange it with the CPU. But the designers knew that it will almost always use huge textures, so they simplified their design and made it able to only access memory in 2MiB chunks. Now each time the CPU manages a chunk of memory for the GPU, it needs to take care that it always lands on a multiple of 2MiB.

If you take fragmentation into account, this leads to all kinds of funny issues. You can get gaps in your memory, because you need to "skip ahead" to the next 2MiB border, or you have a free memory area that is large enough, but does not align to 2MiB...

And it gets even funnier if you have several different devices that have several different alignment requirements. Just one of those neat real-life quirks that can make your nice, clean, theoretical results invalid.

(*): and then there are huge pages, but that is a different can of worms
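To put rough numbers on the "skip ahead" problem, here is a minimal sketch in Python. The 4KiB and 2MiB sizes are the ones from above; the function names and the example free region are made up for illustration, and this is not how any real allocator is written.

```python
KIB = 1024
MIB = 1024 * KIB

PAGE_SIZE = 4 * KIB       # CPU page granularity from the comment above
GPU_ALIGNMENT = 2 * MIB   # alignment the hypothetical GPU insists on


def align_up(address: int, alignment: int) -> int:
    """Round address up to the next multiple of alignment (must be a power of two)."""
    return (address + alignment - 1) & ~(alignment - 1)


def fits_aligned(region_start: int, region_size: int,
                 request_size: int, alignment: int) -> bool:
    """Check whether request_size bytes fit in the free region once the
    start has been pushed forward to the required alignment."""
    aligned_start = align_up(region_start, alignment)
    return aligned_start + request_size <= region_start + region_size


# Illustrative free region: 3MiB long, starting one 4KiB page past a 2MiB border.
region_start = 2 * MIB + PAGE_SIZE
region_size = 3 * MIB

aligned_start = align_up(region_start, GPU_ALIGNMENT)
wasted = aligned_start - region_start  # the gap you have to skip over

print(f"aligned start: {aligned_start // MIB} MiB, skipped: {wasted // KIB} KiB")
print("2MiB request, 4KiB alignment:",
      fits_aligned(region_start, region_size, 2 * MIB, PAGE_SIZE))
print("2MiB request, 2MiB alignment:",
      fits_aligned(region_start, region_size, 2 * MIB, GPU_ALIGNMENT))
```

So the region has 3MiB free, but after skipping to the next 2MiB border only 1MiB + 4KiB of it is left, and a 2MiB request for the GPU no longer fits. The same request with plain 4KiB alignment would have been fine.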

No, not really. This is from the perspective of a developer/engineer, not an end user. I spent 6 months trying to make $product from $company both cheaper and more robust.

In car terms, you don't have to optimize or even be aware of the injection timings just to drive your car around.

Æcktshually, Windows or any other OS would have similar issues, because the underlying computer science problems are probably practically impossible to solve in an optimal way.

Get a nice cup of tea and calm down. I literally never said or implied any of that. Why do you feel that you need to personally attack me in particular?

All I said was that a supposedly easy topic turned into reading a lot of obscure code and papers which weren't really my field at the time.

For the record, I am well aware that the state of embedded system security is an absolute joke and I'm waiting for the day when it all finally halts and catches fire.

But that was just not the topic of this work. My work was efficient memory management under a lot of (specific) constraints, not memory safety.

Also, the root problem is NP-hard, so good luck finding a universal solution that works within real-life resource (chip space, power, price...) limits.

Except that the degree I did this for was in electrical engineering :(

I wonder if it is possible to confuse the cameras. Figure out what criteria make a price lower (skin color? time of day? no idea), then dress up like that / wear a mask with a face printed on it or something.

Nobody mentioning 3D printing? :(

For those un-enlightened in the ways of making inedible spaghetti: Hair spray is often used as a makeshift adhesive to make your prints stick to the printer, and 230°C is conveniently around the temperature you print most stuff at.

(I know it's not hair spray, but it would be more funny)