Ah yes, pragmatists, well known for their constantly sunny and optimistic outlook on the future, consequences be damned (?)

200fifty

In contrast, Web4 identifies products consumers are willing to purchase and comprehends market requirements.

The crazy and hitherto-unheard-of concept of "selling things people actually want to buy to people who want to buy them"... It'll never catch on!

(I mean, it will never catch on for crypto, because applying this philosophy to crypto quickly reveals that no one actually wants the thing they're supposed to be selling)

No no, it's "order of magnitudes". It's like "surgeons general."

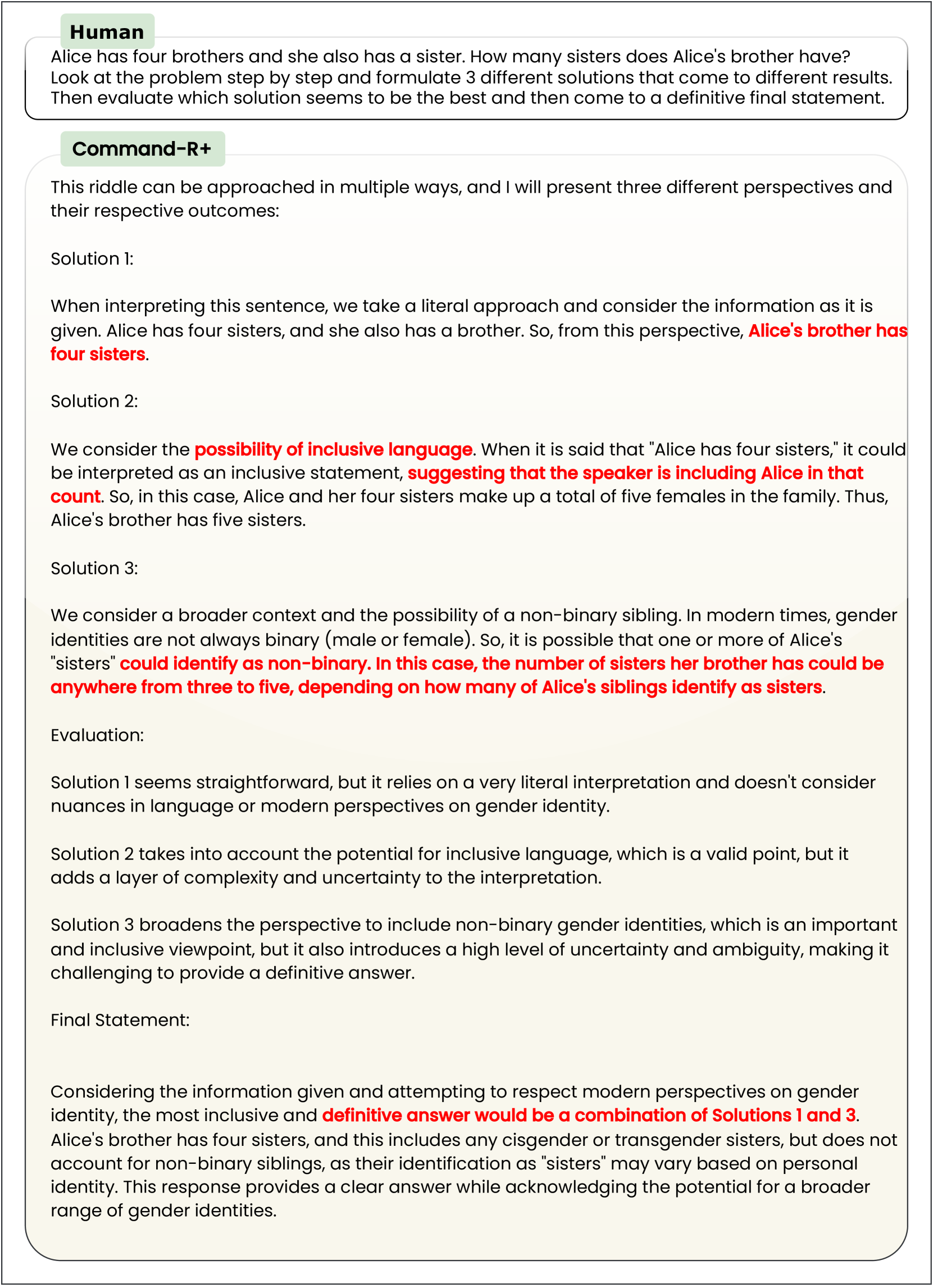

This is my favorite LLM response from the paper, I think:

It's really got everything -- they surrounded the problem with the recommended prompt engineering garbage, which results in the LLM immediately misstating the prompt, then making a logic error on top of that incorrect assumption. Then, when it tries to consider alternate possibilities, it devolves into some kind of corporate-speak nonsense about 'inclusive language', misinterprets the phrase 'inclusive language', gets distracted and starts talking about gender identity, then makes another reasoning error on top of that! (Three to five? What? Why?)

And then, as the icing on the cake, it goes back to its initial faulty restatement of the problem and confidently plonks that down as the correct answer, surrounded by a bunch of irrelevant waffle that doesn't even relate to the question but sounds superficially thoughtful. (It doesn't matter how many of her nb siblings might identify as sisters, because we already know exactly how many sisters she has! Their precise gender identity doesn't matter at all!)

Truly a perfect storm of AI nonsense.

Not gonna lie, the world would probably be better off if guys like Roko just started having sex with sexbots instead of real women

Q: When you think about the big vision, which still my mind is blown that this is your big vision, of “I’m going to send a digital twin into a meeting, and it’s going to make decisions on my behalf that everyone trusts, that everyone agrees on, and everyone acts upon,” the privacy risk there is even higher. The security surface there becomes even more ripe for attack. If you can hack into my Zoom and get my digital twin to go do stuff on my behalf, woah, that’s a big problem. How do you think about managing that over time as you build toward that vision?

A: That’s a good question. So, I think again, back to privacy and security, I think of two things. First of all, it’s how to make sure somebody else will not hack into your meeting. This is Eric; it’s not somebody else. Another thing: during the call, make sure your conversation is very secure. Literally just last week, we announced the industry’s first post-quantum encryption. That’s the first one, and at the same time, look at deepfake technology — we’re also working on that as well to make sure that deepfakes will not create problems down the road. It is not like today’s two-factor authentication. It’s more than that, right? And because deepfake technology is real, now with AI, this is something we’re also working on — how to improve that experience as well.

Spoken like a true person who has not given one iota of thought to this issue and doesn't know what most of the words he's saying mean

Wow, this comment definitely caught my attention! "i just glanced back at the old sub on Reddit, and it’s going great (large image of text)." Sounds like the old sub on Reddit is going great! It reminds me of how people post on Reddit about things. I'm curious to hear what's in the large image of text. Have any of you ever checked old subs on Reddit? How were they going? Let's dive into this intriguing topic together!

Thank god I can have a button on my mouse to open ChatGPT in Windows. It was so hard to open it with only the button in the taskbar, the start menu entry, the toolbar button in every piece of Microsoft software, the auto-completion in browser text fields, the website, the mobile app, the chatbot in Microsoft's search engine, the chatbot in Microsoft's chat software, and the button on the keyboard.

Even with good data, it doesn't really work. Facebook trained an AI (Galactica) exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time; it just learned to phrase the nonsense like a scientific paper...

But the system isn’t designed for that, why would you expect it to do so?

It, uh... sounds like the flaw is in the design of the system, then? If the system is designed in such a way that it can't help but do unethical things, then maybe the system is not good to have.

RationalWiki is an index maintained by the Rationalist community

Lies and slander! I get why he'd assume this based on the name, but it would be pretty funny if the rationalists were responsible for the RationalWiki articles on Yudkowsky et al., since IIRC they're pretty scathing

Wait, run that second one by me again