Try a Scrutiny: https://github.com/AnalogJ/scrutiny#docker

Selfhosted

A place to share alternatives to popular online services that can be self-hosted without giving up privacy or locking you into a service you don't control.

Rules:

- Be civil: we're here to support and learn from one another. Insults won't be tolerated. Flame wars are frowned upon.

- No spam posting.

- Posts have to be centered around self-hosting. There are other communities for discussing hardware or home computing. If it's not obvious why your post topic revolves around selfhosting, please include details to make it clear.

- Don't duplicate the full text of your blog or github here. Just post the link for folks to click.

- Submission headline should match the article title (don’t cherry-pick information from the title to fit your agenda).

- No trolling.

Resources:

- selfh.st Newsletter and index of selfhosted software and apps

- awesome-selfhosted software

- awesome-sysadmin resources

- Self-Hosted Podcast from Jupiter Broadcasting

Any issues in the community? Report them using the report flag.

Questions? DM the mods!

Cheers for this! I just bought a stack of new hard drives myself, and this is exactly what I didn't know I needed.

Does Scrutiny recognize ZFS pools?

Scrutiny uses smartctl --scan to detect devices/drives.

https://github.com/AnalogJ/scrutiny#getting-started

It will recognize the block devices but not the filesystem construct. That means ZFS pools themselves are out of scope.
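To make that concrete, here is a minimal Python sketch of the detection step, assuming smartctl --scan prints one device per line in the form "/dev/sda -d sat # /dev/sda [SAT], ATA device". Everything it returns is a block device plus a device type; nothing in that output describes a ZFS pool. The function names are hypothetical.

```python
import subprocess


def parse_scan(output: str) -> list[tuple[str, str]]:
    """Return (device, type) pairs from `smartctl --scan` text.

    Assumes lines like '/dev/sda -d sat # /dev/sda [SAT], ATA device':
    device path, then '-d <type>', then a comment after '#'.
    """
    devices = []
    for line in output.splitlines():
        fields = line.split("#", 1)[0].split()  # drop the trailing comment
        if not fields:
            continue
        # Fall back to "auto" if no '-d <type>' pair follows the device path.
        dev_type = fields[2] if len(fields) >= 3 and fields[1] == "-d" else "auto"
        devices.append((fields[0], dev_type))
    return devices


def scan_devices() -> list[tuple[str, str]]:
    # Needs smartmontools installed and usually root privileges.
    result = subprocess.run(["smartctl", "--scan"], capture_output=True, text=True, check=True)
    return parse_scan(result.stdout)


if __name__ == "__main__":
    sample = (
        "/dev/sda -d sat # /dev/sda [SAT], ATA device\n"
        "/dev/nvme0 -d nvme # /dev/nvme0, NVMe device\n"
    )
    print(parse_scan(sample))  # block devices only, no pool names
```

A pool built from /dev/sda and /dev/sdb would show up here only as those two disks.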

If you want regular automatic HDD checks and don’t need a web UI, I recommend https://github.com/smartmontools

So, just as an FYI to those who trust these sorts of things: SMART is a self-reporting technology. The hard drive is more than capable of lying about the data in that system if doing so protects the manufacturer from having to replace faulty drives. What's more, it's actually pretty rare for SMART to report an issue before the drive just sorta... dies one way or another.

It's not useless technology, but it's pretty damn close. I don't even bother with it in any of my setups. Instead, I watch for the server having issues reading or writing; SMART won't tell me anything before that will.

Source: Was a firmware engineer on hard drives for 10 years.
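The comment above doesn't say how the read/write issues are detected. One possible approach, sketched here in Python as an assumption rather than the commenter's actual setup, is to count the I/O errors the kernel logs for each device. The regular expression assumes messages shaped like "I/O error, dev sdb, sector 123456 ..."; the exact wording varies between kernel versions, and the function names are hypothetical.

```python
import re
import subprocess
from collections import Counter

# Assumed kernel message shape, e.g.
# "blk_update_request: I/O error, dev sdb, sector 123456 op 0x0:(READ) ..."
IO_ERROR_RE = re.compile(r"I/O error, dev (\w+),")


def count_io_errors(log_text: str) -> Counter:
    """Count kernel-reported I/O errors per block device name."""
    return Counter(m.group(1) for m in IO_ERROR_RE.finditer(log_text))


def read_kernel_log() -> str:
    # journalctl -k shows only kernel messages; --since limits it to the last hour.
    result = subprocess.run(
        ["journalctl", "-k", "--since", "-1h", "--no-pager"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Calling count_io_errors(read_kernel_log()) from a timer and alerting on any non-zero count would flag a drive at roughly the point the comment describes: when reads or writes start failing.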

SMART value monitoring has helped me find faulty drives more than once. And drives are tested before being added to a production system.

Of course, system drives are kept separate from data drives. The latter can be monitored perfectly well with SMART values.

Having done years of enterprise fault analysis, I promise you that SMART will happily tell you there is a problem at the same time you begin to experience data corruption. You might get lucky and catch a reallocated sector count spiking, or a temperature value going out of family, but in the field those attributes really suck at prediction.

If you want to know if a drive is healthy, track data corruption at the file system layer.

I don't think you can track data corruption either, because you only find out once it has occurred. The same is true for SMART values, as you correctly state.

I believe it takes a mix of ZFS, ECC memory, and SMART monitoring.

https://phoenixnap.com/blog/data-corruption

Thanks for clarifying the intricacies connected to SMART monitoring.

Okay, but how do you monitor the issues with reading and writing?

If I care about the data? It’s on a file system which reports file corruption.

Otherwise? I don’t trust it at all. I back it up and replace the drive when it dies.

A file system that reports file corruption? I believe ZFS is one of those, but I'm not really familiar with how that works.

That’s what I use personally, I’ve seen the feature elsewhere too.

There's an app called Scrutiny that does exactly what you are looking for.

I just have a shell script running daily that runs the smartctl short test, checks certain S.M.A.R.T. values, and pings healthchecks.io when successful. Easy, and it seems to do the job.
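The script itself isn't shown; as a rough Python sketch of the same idea, the checking part could look like this. The watched attribute IDs, the "any non-zero raw value fails" rule, and the function names are assumptions, and the ping URL is passed in by the caller (healthchecks.io check URLs look like https://hc-ping.com/<uuid>). The short self-test started by smartctl -t short runs in the background on the drive, so this sketch only reads the attribute table from smartctl -A.

```python
import subprocess
import urllib.request

# Hypothetical choice of attributes to watch (any non-zero raw value fails):
# 5 Reallocated_Sector_Ct, 187 Reported_Uncorrect,
# 197 Current_Pending_Sector, 198 Offline_Uncorrectable
WATCHED = {5, 187, 197, 198}


def parse_attributes(output: str) -> dict[int, int]:
    """Map attribute ID -> raw value from the `smartctl -A` table (ATA drives)."""
    attrs = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows start with a numeric ID; RAW_VALUE is the 10th column.
        if len(fields) >= 10 and fields[0].isdigit():
            try:
                attrs[int(fields[0])] = int(fields[9])
            except ValueError:
                pass  # raw values such as "0/0" are not plain integers
    return attrs


def is_healthy(attrs: dict[int, int]) -> bool:
    return all(attrs.get(attr_id, 0) == 0 for attr_id in WATCHED)


def check_and_ping(device: str, ping_url: str) -> bool:
    # smartctl's exit status is a bitmask, so don't treat non-zero as fatal here.
    out = subprocess.run(["smartctl", "-A", device], capture_output=True, text=True).stdout
    healthy = is_healthy(parse_attributes(out))
    if healthy:
        # Healthchecks.io alerts when an expected ping does not arrive.
        with urllib.request.urlopen(ping_url, timeout=10):
            pass
    return healthy
```

Pinging only on success means a dead drive, a broken script, and a powered-off server all end up as the same missed-ping alert.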

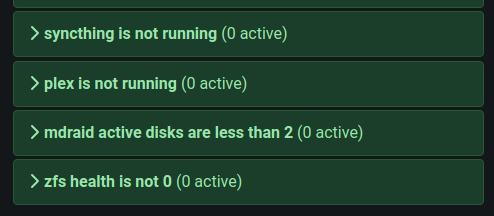

Ingredients

- A 10-line Python script that parses the zpool status and looks for errors

- A systemd one-shot service that runs it and puts the output into node_exporter

- A systemd timer that runs the service every 5 minutes

- A Prometheus container that, among other things, has an alert for changes in the zpool health data

Result

Once this is set up, it's trivial to add other checks like smartmontools. If you add Alertmanager, you can get emails and other alerts sent to you while you're not looking.
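The Python script from the first ingredient isn't shown. A minimal sketch of the same idea could parse zpool status -p (the -p flag prints exact, parsable counters) and write metrics for node_exporter's textfile collector. The metric names zpool_healthy and zpool_error_count, the output directory, and the choice to sum READ/WRITE/CKSUM across every config row are all hypothetical.

```python
import os
import subprocess
import tempfile

# Hypothetical path: must match node_exporter's --collector.textfile.directory.
TEXTFILE_DIR = "/var/lib/node_exporter/textfile_collector"


def parse_zpool_status(output: str) -> dict[str, dict]:
    """Return {pool: {"state": str, "errors": int}} from `zpool status -p` text."""
    pools: dict[str, dict] = {}
    current, in_config = None, False
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        key = fields[0]
        if key == "pool:":
            current, in_config = fields[1], False
            pools[current] = {"state": "UNKNOWN", "errors": 0}
        elif key == "state:" and current:
            pools[current]["state"] = fields[1]
        elif key == "config:":
            in_config = True
        elif key == "errors:":
            in_config = False
        elif in_config and current and len(fields) >= 5 and all(f.isdigit() for f in fields[2:5]):
            # Config rows are NAME STATE READ WRITE CKSUM; add up the counters
            # from every row (pool, vdev and disk) as a crude "any error" signal.
            pools[current]["errors"] += sum(int(f) for f in fields[2:5])
    return pools


def to_prometheus(pools: dict[str, dict]) -> str:
    """Render Prometheus text format, keeping each metric family contiguous."""
    items = sorted(pools.items())
    out = ["# TYPE zpool_healthy gauge"]
    out += [f'zpool_healthy{{pool="{p}"}} {int(v["state"] == "ONLINE")}' for p, v in items]
    out.append("# TYPE zpool_error_count gauge")
    out += [f'zpool_error_count{{pool="{p}"}} {v["errors"]}' for p, v in items]
    return "\n".join(out) + "\n"


def write_textfile(text: str, directory: str = TEXTFILE_DIR) -> None:
    # Write a temp file in the same directory, then rename it atomically so
    # node_exporter never reads a half-written .prom file.
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        f.write(text)
    os.chmod(tmp, 0o644)  # mkstemp creates 0600; node_exporter may run as another user
    os.replace(tmp, os.path.join(directory, "zpool.prom"))


def main() -> None:
    # Intended to be run by the systemd one-shot service (needs the zpool CLI).
    status = subprocess.run(["zpool", "status", "-p"], capture_output=True, text=True, check=True)
    write_textfile(to_prometheus(parse_zpool_status(status.stdout)))
```

With the service calling main() every 5 minutes, a Prometheus alert could fire on zpool_healthy == 0 or on changes(zpool_error_count[1h]) > 0; treat those expressions as a starting point rather than tested rules.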

smartd sends email to Mailrise, which forwards the notifications to a self-hosted Gotify instance. I then get notifications through the Gotify mobile app and the web interface.