I don't see the US restricting AI development. No matter what is morally right or wrong, this is strategically important, and they won't kneecap themselves in the global competition.

Strategically important how exactly?

Great power competition and the military-industrial complex. AI is a pretty vague term, but in practice it could describe drone swarming technology, cyber warfare, etc.

LLM-based chatbots and image generators are the types of "AI" that rely on stealing people's intellectual property. I'm struggling to see how that applies to "drone swarming technology." The only obvious use case is in the generation of propaganda.

You could use LLM-like AI to go through vast amounts of combat data and make sense of it in the field, and to analyze data from mass surveillance. I doubt they need many more excuses.

A case could be made that tech bros have overhyped the importance of AI to the military-industrial complex, but it nevertheless has plenty of nasty uses.

> The only obvious use case is in the generation of propaganda.

It is indeed. I would guess that's the game, and is already happening.

Luddites smashing power looms.

It's worth remembering that the Luddites were not against technology. They were against technology that replaced workers, without compensating them for the loss, so the owners of the technology could profit.

Moreover, Luddites were opposed to the replacement of independent at-home workers by oppressed factory child labourers. Much like OpenAI aims to replace creative professionals by an army of precarious poorly paid microworkers.

Yep! And it's not like a lot of creative professionals are paid all that well right now. The tech and finance industries do not value creatives.

"Starving artist"

Obviously I can't speak for all countries, but in mine, an artist and a programmer with the same years of experience working for the same company will not be getting the same salary, despite the fact that neither could do the other's job. One of those salaries will be slightly above minimum wage (which is currently lower than the wage needed to cover the cost of living), and the other will be around double the national average wage. So there are in fact artists using food banks right now, and it's not because the creatives aren't working as hard as the tech professionals. One is simply valued higher than the other.

Meritocracy is a myth used to control people.

Their problem was that they smashed too many looms and not enough capitalists. AI training isn't just for big corporations. We shouldn’t applaud people who put up barriers that will make it prohibitively expensive for regular people to keep up. This will only help the rich and give corporations control over a public technology.

It should be prohibitively expensive for anyone to steal from regular people, whether it's big companies or other regular people. I'm no more enthusiastic about the idea of people stealing from artists to create open source AIs than I am when corporations do it. For an open source AI to be worth the name, it would have to use only open source training data - i.e., stuff that is in the public domain or has specifically had an open source licence assigned to it. If the creator hasn't said they're okay with their content being used for AI training, then it's not valid for use in an open source AI.

I recommend reading this article by Kit Walsh, a senior staff attorney at the EFF, if you haven't already. The EFF is a digital rights group that most recently won a historic case: border guards now need a warrant to search your phone.

People are trying to conjure up new rights to take another piece of the public's right and access to information, and to fashion themselves as a new owner class. Artists and everyone else should accept that others have the same rights as they do, and they can't now take those opportunities from other people because it's their turn now.

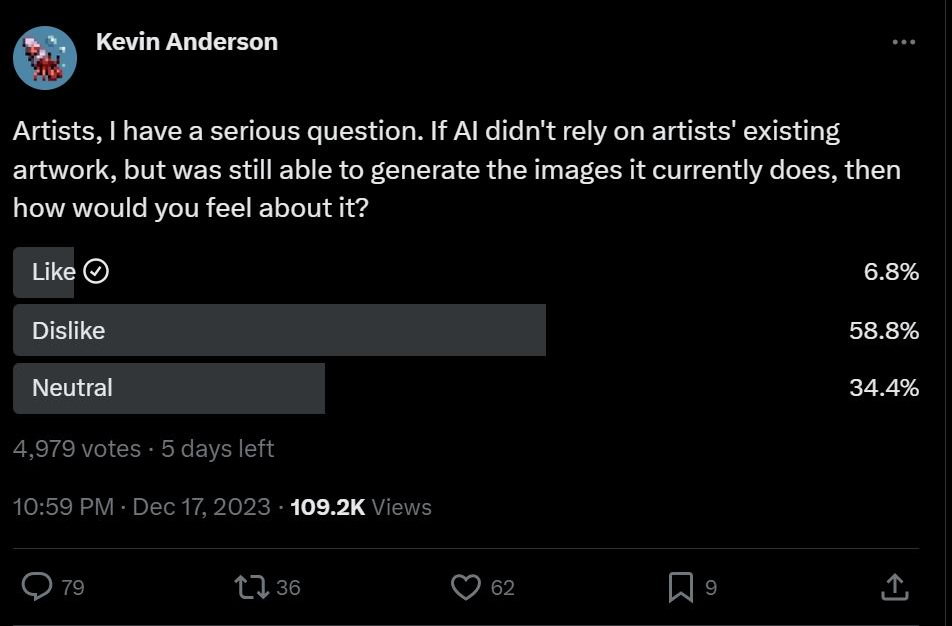

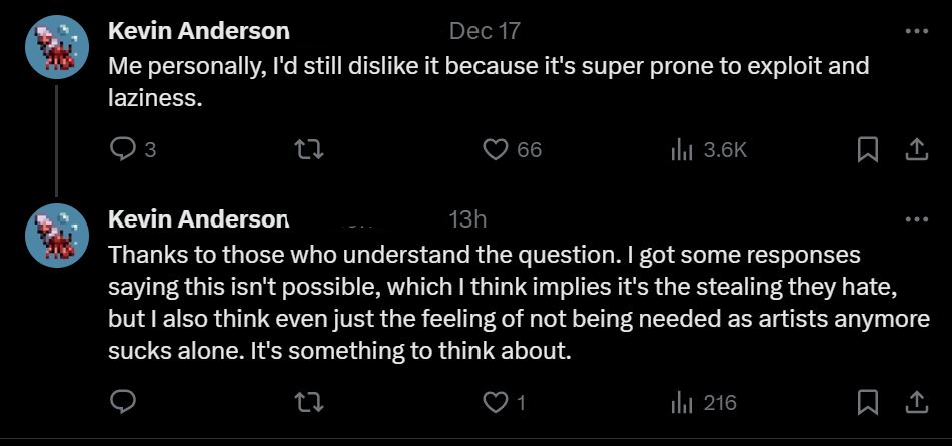

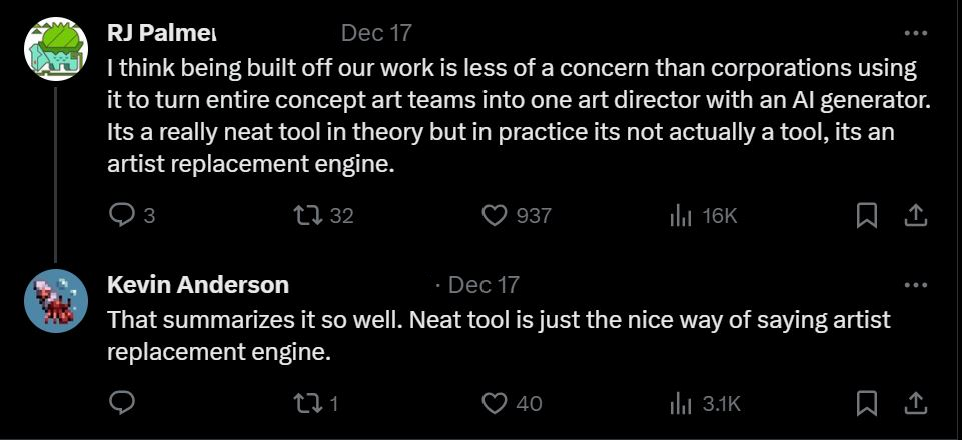

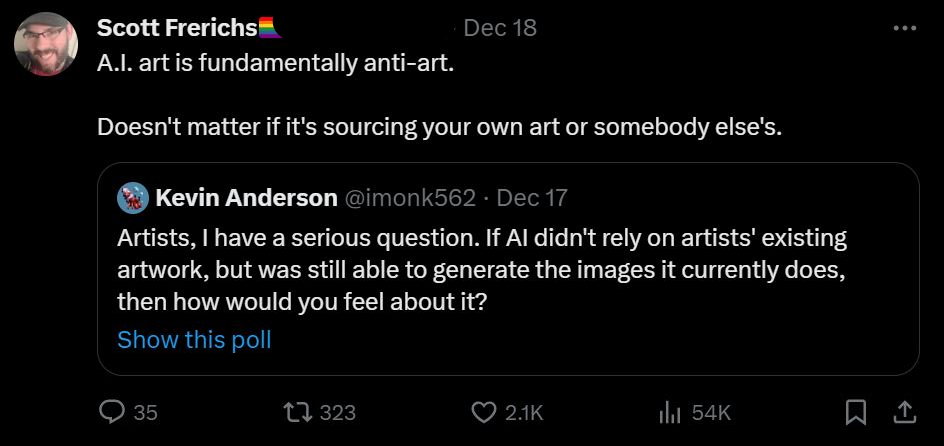

There's already a model trained on just Creative Commons licensed data, but you don't see them promoting it. That's because it was not about the data, it's an attack on their status, and when asked about generators that didn't use their art, they came out overwhelmingly against with the same condescending and reductive takes they've been using this whole time.

I believe that generative art, warts and all, is a vital new form of art that is shaking things up, challenging preconceptions, and getting people angry - just like art should.

I'm actually fine with generative AI that uses only public domain and creative commons content. I'm not threatened by AI as a creative, because AI can only iterate on its own training data. Only humans can create something genuinely new and original. My objection is solely on the basis of theft. If we agree that everybody has the basic right to control their own data and content, then that logically has to extend to artists: they must have the right to control their own work, and consenting to humans viewing it isn't the same as consenting to having it fed into an AI.

I suspect there would be a lot more artists open to considering the benefits of a generative AI using only public domain and creative commons works if they weren't justifiably aggrieved at having their life's work strip-mined. Expecting the victims of exploitation to be 100% rational about their exploiter (or other adjacent parties trying to argue why it's fine when they do it) isn't reasonable. At this point, artists simply don't trust the generative AI industry, and there needs to be a significant and concerted effort to rectify existing wrongs to repair that trust. One organisation offering a model based on creative commons artworks, when the rest of the generative AI industry is still stealing everything that's not nailed down, does not promote trust. Regulate, compensate, mend some fences, and build trust. Then go and talk to artists, and have the conversations that should have been had before the first AI models were built. The AI industry needs to prove it can be trusted, and then learn to ask for permission. Then, maybe, it can ask for forgiveness.

> I’m actually fine with generative AI that uses only public domain and creative commons content. I’m not threatened by AI as a creative, because AI can only iterate on its own training data. Only humans can create something genuinely new and original.

I don't like this kind of thought because it tries to minimize the role of the person at the controls. There is no reason why a person using a model trained on 1400s art, African art, anime, photography, cubism, sculpture, culinary art, impressionism, nature, ancient Greco-Roman art, etc. wouldn't be able to come up with novel concepts, executions, and styles, since it's very much a combination of styles that gives rise to new types of art in all other mediums. And that's before you even start fine-tuning on your own stuff.

> My objection is solely on the basis of theft. If we agree that everybody has the basic right to control their own data and content, then that logically has to extend to artists: they must have the right to control their own work, and consenting to humans viewing it isn’t the same as consenting to having it fed into an AI.

It isn't like a human viewing it, but it is very like other protected uses of data. To quote the article:

> Fair use protects reverse engineering, indexing for search engines, and other forms of analysis that create new knowledge about works or bodies of works. Here, the fact that the model is used to create new works weighs in favor of fair use as does the fact that the model consists of original analysis of the training images in comparison with one another.

This is just a way to analyze and reverse engineer concepts in images so you can make your own original works. Reverse engineering has been fair use since Sega Enterprises Ltd. v. Accolade, Inc. in 1992, which was then affirmed in Sony Computer Entertainment, Inc. v. Connectix Corporation.

In the US, fair use balances the interests of copyright holders with the public’s right to access and use information. There are rights people can maintain over their work, and the rights they do not maintain have always been to the benefit of self-expression and discussion. There are just some things you can't stop people from doing with things you've shared with them, and we shouldn’t be trying to change that.

Calling this stealing is self-serving, manipulative rhetoric that unjustly vilifies people and misrepresents the reality of how these models work and how creative the people who use them can be.

If the models were purely being used for research, I might buy the argument that fair use applies. But the fair use balancing act also incorporates an element of whether the usage is commercial in nature and is intended to compete with the rights holder in a way that affects their livelihood. Taking an artist's work in order to mass produce pieces that replicate their style, in such a way that it prevents the artist from earning a living, definitely affects their livelihood, so there is a very solid argument that fair use ceased to apply when the generative AI entered commercial use. The people that made the AI models aren't engaging in self-expression at this point. The users of the AI models may be, but they're not the ones that used all the art without consent or compensation. The companies running the AI models are engaged purely in profit-seeking, making money from other people's work. That's not self-expression and it's not discussion. It's greed.

Although the courts ruled that reverse engineering software to make an emulator was fair use, it's worth bearing in mind that the emulator is intended to allow people to continue using software they have purchased after the lifespan of the console has elapsed - so the existence of an emulator is preserving consumers' rights to use the games they legally own. Taking artists' work to create an AI so you no longer need the artist has more in common with pirating the games rather than creating an emulator. You're not trying to preserve access to something you already have a licence to use. An AI isn't replacing artwork that you have the right to use but that you can no longer access because of changing hardware. AI is allowing you to use an artist's work in order to cut them out of the equation without you ever paying them for the work you have benefitted from.

The AI models can combine concepts in new ways, but they still can't create anything truly new. An AI could never have given us something like Cubism, for example, because visually nothing like it had ever existed before, so there would have been nothing in its training data that could have made anything like it. What a human brings to the process is life experience and an emotional component that an AI lacks. All an AI can do is combine existing concepts into new combinations (like combining fried eggs and flowers - both of those objects are existing concepts). It can't create entirely new things that aren't represented somewhere in its training data. If it didn't know what fried eggs and flowers were, it would be unable to create them.

> If the models were purely being used for research, I might buy the argument that fair use applies. But the fair use balancing act also incorporates an element of whether the usage is commercial in nature and is intended to compete with the rights holder in a way that affects their livelihood. Taking an artist’s work in order to mass produce pieces that replicate their style, in such a way that it prevents the artist from earning a living, definitely affects their livelihood, so there is a very solid argument that fair use ceased to apply when the generative AI entered commercial use.

Fair Use also protects commercial endeavors. Fair use is a flexible and context-dependent doctrine based on careful analysis of four factors: the purpose and character of the use, the nature of the copyrighted work, the amount and substantiality of the portion used, and the effect of the use upon the potential market. No one factor is more important than the others, and it is possible to have a fair use defense even if you do not meet all the criteria of fair use.

More importantly, I don't think more works in a style would prevent an artist from making a living. IMO, it could serve as an ad to point people where to get "the genuine article".

> The people that made the AI models aren’t engaging in self-expression at this point. The users of the AI models may be, but they’re not the ones that used all the art without consent or compensation. The companies running the AI models are engaged purely in profit-seeking, making money from other people’s work. That’s not self-expression and it’s not discussion. It’s greed.

Agreed, but don't forget that there are plenty of regular people training their own models and offering them to everyone for free who don't have a company apparatus to defend them, and they are targeted and not spared this ire.

> Although the courts ruled that reverse engineering software to make an emulator was fair use, it’s worth bearing in mind that the emulator is intended to allow people to continue using software they have purchased after the lifespan of the console has elapsed - so the existence of an emulator is preserving consumers’ rights to use the games they legally own. Taking artists’ work to create an AI so you no longer need the artist has more in common with pirating the games rather than creating an emulator. You’re not trying to preserve access to something you already have a licence to use. An AI isn’t replacing artwork that you have the right to use but that you can no longer access because of changing hardware. AI is allowing you to use an artist’s work in order to cut them out of the equation without you ever paying them for the work you have benefitted from.

Making novel works has nothing in common with reproducing and distributing someone else's creation. It is preserving the public's rights to self-expression, no matter the medium. No artist can insert themselves in the conversation over a style to try to collect payment. Imagine if every one of their inspirations did the same to them?

> The AI models can combine concepts in new ways, but they still can’t create anything truly new. An AI could never have given us something like Cubism, for example, because visually nothing like it had ever existed before, so there would have been nothing in its training data that could have made anything like it.

Cubism is, in part, a combination of the simplified and angular forms of ancient Iberian sculptures, the stylized and abstract features of African tribal masks, and the flat and decorative compositions of Japanese prints. Nothing like it existed before because no one had combined those specific concepts quite yet.

> What a human brings to the process is life experience and an emotional component that an AI lacks.

It is still a human making generative art, and they can use their emotions and learned experiences to guide the creation of works. The model is just a tool to be harnessed by people.

> All an AI can do is combine existing concepts into new combinations (like combining fried eggs and flowers - both of those objects are existing concepts). It can’t create entirely new things that aren’t represented somewhere in its training data. If it didn’t know what fried eggs and flowers were, it would be unable to create them.

New tools have already made what you're talking about possible, and they will continue to improve.

I think we're very much in "agree to disagree" territory here.

Yeah, I just want to let you know where I'm coming from. I hope I've made that clear.

> If successful, he could force the companies responsible for applications such as ChatGPT or Midjourney to compensate thousands of creators. They may even have to retire their algorithms and retrain them with databases that don’t infringe on intellectual property rights.

They will readily agree to this after having made their money, and will use their ill-gotten gains to train a new model. The rest of us will have to go pound sand, as making a new model will have become prohibitively expensive. Good intentions, but it will only help them by pulling up the ladder behind them.

It wouldn't be pulling up the ladder behind them if we force them to step down that ladder and burn it by retraining their models from scratch "with databases that don't infringe on intellectual property rights".

I’m an artist and I can guarantee his lawsuits will accomplish jack squat for people like me. In fact, if successful, it will likely hurt artists trying to adapt to AI. Let’s be serious here, copyright doesn’t really protect artists, it’s a club for corporations to swing around to control our culture. AI isn’t the problem, capitalism is.

Dumbass. YouTube has single-handedly proven how broken the copyright system is and this dick wants to make it worse. There needs to be a fairer rebalancing of how people are compensated and for how long.

What exactly that looks like I'm not sure but I do know that upholding the current system is not the answer.