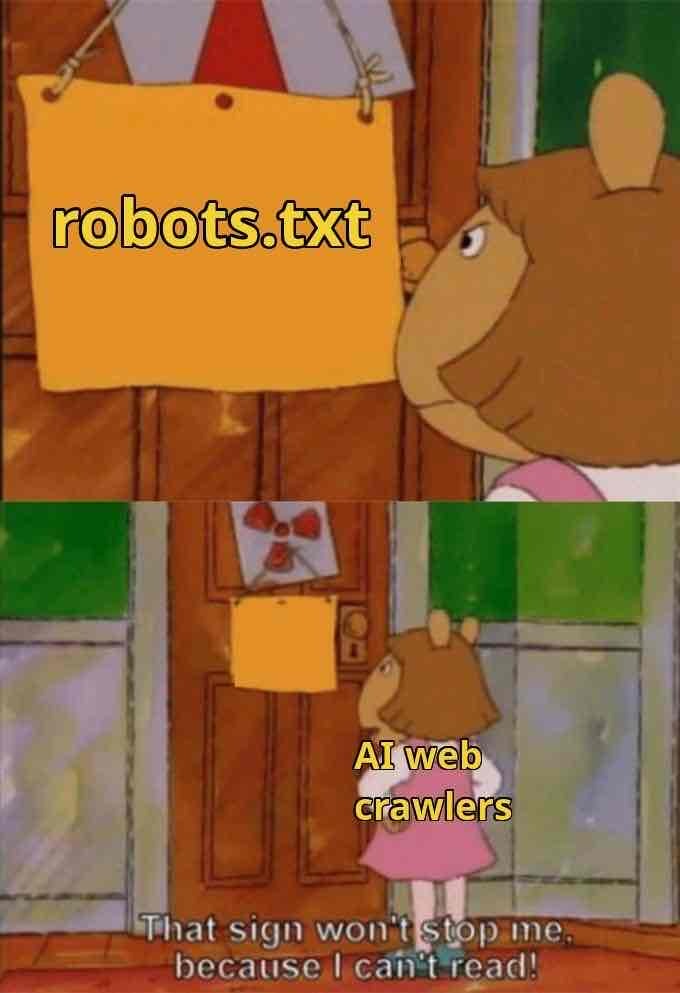

TikTok's spider has been a real offender for me. For one site I host, it burned through 3TB of data over 2 months, requesting the same 500 images over and over. It was ignoring the robots.txt too, so I ended up having to block their user agent.

Lemmy Shitpost

Are you sure the caching headers your server is sending for those images are correct? If your server is telling the client to not cache the images, it'll hit the URL again every time.

If the image at a particular URL will never change (for example, if your build system inserts a hash into the file name), you can use a far-future expires header to tell clients to cache it indefinitely (e.g. expires max in Nginx).
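For anyone not on Nginx, here is a rough Python sketch of the same idea: send a long-lived `Cache-Control` header only for files whose names contain a content hash. The filename pattern and the `cache_headers` helper are assumptions made up for illustration, not something from any particular framework, and the one-year lifetime is just a common choice.

```python
import re

# Assumption: fingerprinted assets look like "name.<8+ hex chars>.ext",
# e.g. "logo.3f9a1c2b.png". Adjust the pattern to your build system.
HASHED_NAME = re.compile(r"\.[0-9a-f]{8,}\.[A-Za-z0-9]+$")

ONE_YEAR = 365 * 24 * 60 * 60  # lifetime in seconds


def cache_headers(path: str) -> dict:
    """Return caching headers for a static file path (hypothetical helper)."""
    if HASHED_NAME.search(path):
        # The content behind this URL never changes, so clients may keep it.
        return {"Cache-Control": f"public, max-age={ONE_YEAR}, immutable"}
    # Unhashed files may change: allow caching but require revalidation.
    return {"Cache-Control": "no-cache"}


print(cache_headers("/img/logo.3f9a1c2b.png"))
print(cache_headers("/img/logo.png"))
```

This only helps with clients that honor cache headers; a crawler that ignores them will still re-download everything.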

Thanks for the suggestion, turns out there are no cache headers on these images. They indeed never change, I’ll try that update. Thanks again

Perfect use of the meme

Time to fill the site's code with randomly generated garbage text that humans will not see but crawlers will gobble up?

I don't think it's a bad idea, but it's largely dependent on the crawler. I can't speak for AI-based crawlers, but typical scraping either targets specific elements on a page or grabs the whole page and parses it for what you're looking for. In both cases, your content is already scraped and added to the pile. Overall, I have to wonder how long "poisoning the water well" is going to work. You can take me with a grain of salt, though; I work on detecting bots for a living.

> I work on detecting bots for a living.

You should just tell people you're a blade runner.

I'm a blade runner. 😁

You see a turtle, upended on the hot asphalt. As you pass it, you do not stop to help. Why is that?

also that job title is cool as fuck

I agree and I wish I was actually that cool. I just look at data all day and write rules. 🫠

Until you realize that you are paying for access fees/network

Accessibility tho

All this robots.txt stuff "perplex" me.

She should get a library card.

She tried but she couldn't read the application.

TBF, pushing a site to the public while adding a "no scraping" rule is a bit of a shitty practice; and pushing it and adding a "no scraping, unless you are Google" is a giant shitty practice.

Rules for politely scraping the site are fine. But then, there will always be people that disobey those, so you must also actively enforce those rules too. So I'm not sure robots.txt is really useful at all.

No it's not, what a weird take. If I publish my art online for enthusiasts to see, it's not automatically licensed to everyone to distribute. If I specifically want to forbid entities I have huge ethical issues with (such as Google, OpenAI et al.) from scraping and transforming my work, I should be able to.

How would a site make itself accessible to the internet in general while also not allowing itself to be scraped using technology?

robots.txt does rely on being respected, just like no trespassing signs. The lack of enforcement is the problem, and keeping robots.txt to track the permissions would make it effective again.
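To make the "sign on the fence" point concrete, here is a minimal sketch of what a well-behaved crawler does with robots.txt, using Python's standard `urllib.robotparser`. The rules and the bot names (`ExampleBot`, `OtherBot`) are made up for illustration. Note that the check runs entirely on the crawler's side; nothing on the server stops a bot that never asks.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: ban one named crawler entirely,
# keep everyone else out of /private/ only.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(user_agent: str, url: str) -> bool:
    """Ask whether the parsed rules permit this agent to fetch this URL."""
    return parser.can_fetch(user_agent, url)


print(allowed("ExampleBot", "/images/cat.png"))  # banned everywhere
print(allowed("OtherBot", "/images/cat.png"))    # falls under the * rules
print(allowed("OtherBot", "/private/notes"))     # blocked path for everyone
```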

I am agreeing, just with a slightly different take.

User agent catching is rather effective. You can serve different responses based on UA.

So generally people will use a robots.txt to catch the bots that play nice and then use user agents to manage abusers.
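A bare-bones sketch of the user agent side, assuming a simple substring blocklist. The tokens and the `status_for` helper are hypothetical; in practice this check usually lives in the web server or CDN config rather than application code.

```python
from typing import Optional

# Hypothetical blocklist: lowercase substrings of abusive crawlers' user agents.
BLOCKED_UA_TOKENS = ("examplebot", "badspider")


def status_for(user_agent: Optional[str]) -> int:
    """Pick an HTTP status code based on the request's User-Agent header."""
    ua = (user_agent or "").lower()
    if any(token in ua for token in BLOCKED_UA_TOKENS):
        return 403  # refuse crawlers on the blocklist
    return 200  # serve everyone else normally


print(status_for("Mozilla/5.0 (compatible; ExampleBot/1.0)"))
print(status_for("Mozilla/5.0 (X11; Linux x86_64) Firefox/125.0"))
```

This only catches crawlers that announce themselves honestly, since the User-Agent header is whatever the client chooses to send.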

People really should be providing a sitemap.xml file