this post was submitted on 16 Feb 2024

linuxmemes

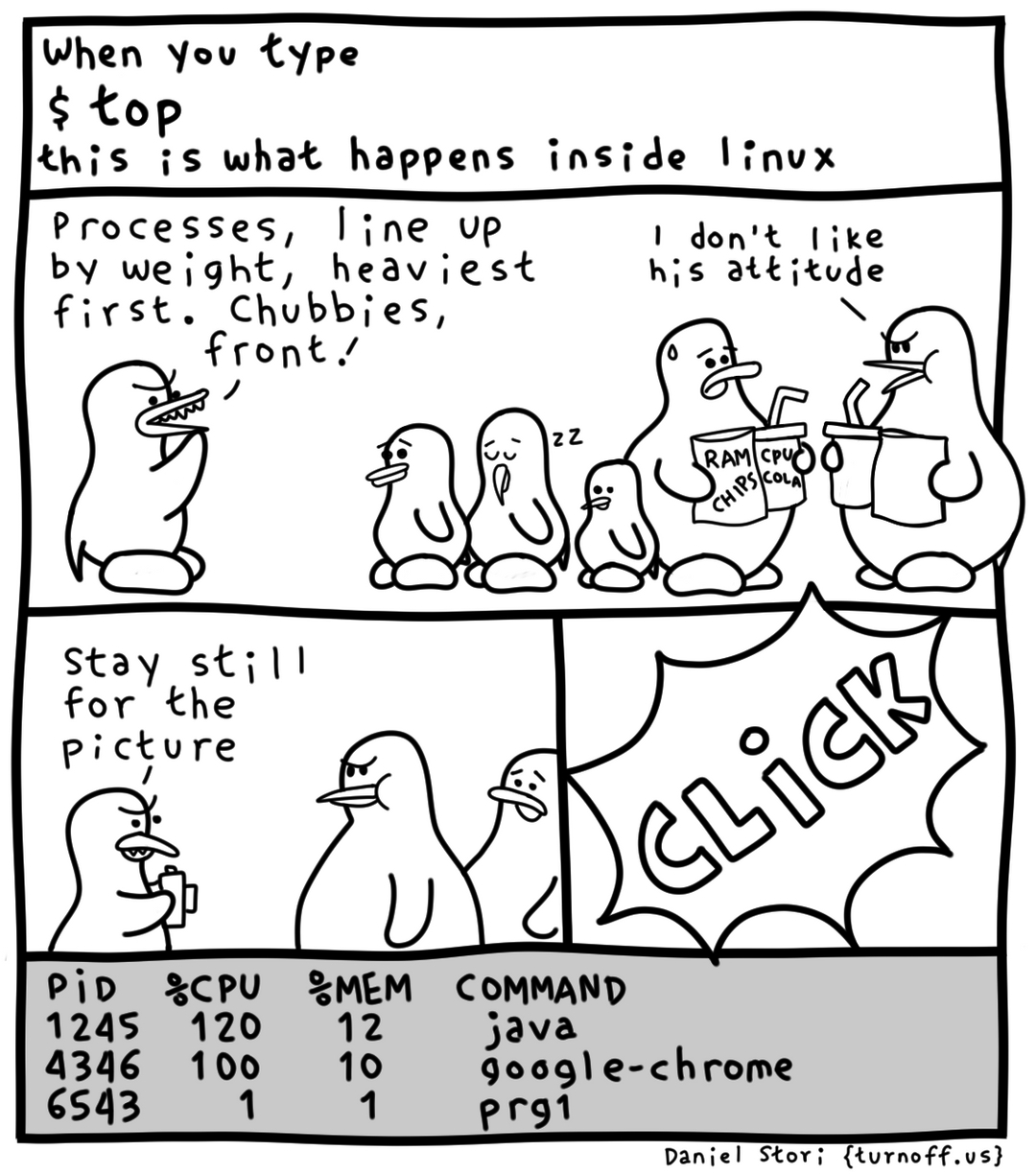

While I have no specific examples, that is the job of the scheduling algorithm. The kernel is responsible for looking at the running processes and figuring out how to assign them CPU time efficiently. It can weigh a variety of factors, such as a process's past behavior, whether it is blocked on I/O, its priority, etc.

There is no perfect scheduling algorithm. Each one has tradeoffs depending on what the priority of the system is.
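To make the tradeoff a bit more concrete, here is a minimal Python sketch comparing two textbook policies, first come first served and shortest job first, on a single core. The burst lengths and the `waiting_times` helper are made up for illustration; real schedulers juggle far more than this.

```python
def waiting_times(bursts):
    """Return how long each job waits before it starts, run in the given order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)   # the job starts once everything before it is done
        clock += burst
    return waits

# Hypothetical CPU bursts (arbitrary time units), listed in arrival order.
bursts = [8, 1, 2]

fcfs = waiting_times(bursts)          # first come, first served
sjf = waiting_times(sorted(bursts))   # shortest job first

print("FCFS average wait:", sum(fcfs) / len(fcfs))
print("SJF average wait:", sum(sjf) / len(sjf))
```

Shortest job first gives the lower average wait here, but the long job now goes last, and with a steady stream of short jobs it might never run at all. That is the kind of tradeoff each policy makes.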

Also, you don't have to relinquish all control to the process if you have multiple cores, since the kernel can keep running on another one. If you do hand a core over entirely, I believe a timer interrupt stops the process after some time so the kernel can always check in, but again, it depends on the implementation.

That is called context switching. Simplified, a process is a list of instructions and a bundle of memory. The memory is composed of RAM and CPU registers (again, simplified). The process memory can stay in the same spot in RAM, but the register contents need to move out of the way so another process can use the core. When a process is yanked away, the state of the registers for that process is snapshotted and stored in RAM managed by the kernel. This allows another process to be scheduled on that core without destroying important process data. To resume the paused process, you just need to restore the registers to the snapshotted state and have the core execute the next instruction for that process, and the process would be none the wiser.

Of course, there's a lot more that happens internally, but that's the main gist.
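For anyone who prefers code, the save/restore idea can be sketched as a toy simulation in Python. Everything here is invented for illustration (the `Process` and `Core` classes, the two-register "CPU", the fixed time slice); a real kernel does this in architecture-specific assembly and saves much more state.

```python
from dataclasses import dataclass, field

@dataclass
class Process:
    name: str
    program: list  # toy "instructions": each one is a number to add to acc
    # Snapshot of the registers, kept in "kernel memory" while not running.
    saved: dict = field(default_factory=lambda: {"pc": 0, "acc": 0})

class Core:
    def __init__(self):
        self.regs = {"pc": 0, "acc": 0}  # program counter and accumulator

    def load(self, proc):
        self.regs = dict(proc.saved)     # restore the snapshot

    def save(self, proc):
        proc.saved = dict(self.regs)     # snapshot the registers

    def run(self, proc, max_steps):
        steps = 0
        while steps < max_steps and self.regs["pc"] < len(proc.program):
            self.regs["acc"] += proc.program[self.regs["pc"]]
            self.regs["pc"] += 1
            steps += 1

def round_robin(procs, quantum):
    """Run each process for at most `quantum` steps, then switch to the next."""
    core = Core()
    pending = list(procs)
    while pending:
        proc = pending.pop(0)
        core.load(proc)          # resume exactly where it left off
        core.run(proc, quantum)
        core.save(proc)          # yank it off the core
        if proc.saved["pc"] < len(proc.program):
            pending.append(proc)

a = Process("a", [1, 2, 3, 4])
b = Process("b", [10, 20, 30])
round_robin([a, b], quantum=2)
print(a.saved["acc"], b.saved["acc"])
```

Both processes finish with the same totals they would get running alone, even though they were interrupted and interleaved on the one core.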

Is there a perfect scheduler that is non-optimal in the big-O sense but is optimal if you're looking at maximizing hardware utilization? In other words, a scheduler that takes a long time to work out how much CPU time each process gets, but gives you the best possible total CPU utilization? I realize that it would not be ideal since we'd essentially have these "sudden stops" as it recalculates the schedule. I'm just more interested in the theory.

If you have a fixed collection of processes to run on a single processor and unlimited time to schedule them in, you can always brute force all permutations of the processes and then pick whichever permutation maximizes or minimizes whatever property you like. The problem with this approach is that it has awful time complexity: n processes have n! possible orderings.

Edit: There are probably other subtle issues that can arise, like I/O interrupts and other weird events fwiw.
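A minimal Python sketch of that brute-force idea, assuming the burst lengths are known up front and the property to minimize is total waiting time (both are assumptions for the sake of the example, and `total_wait` is a made-up helper):

```python
from itertools import permutations

def total_wait(order, bursts):
    """Sum of the time each process waits before starting, for one ordering."""
    clock = total = 0
    for name in order:
        total += clock
        clock += bursts[name]
    return total

# Hypothetical fixed set of processes with known CPU bursts.
bursts = {"a": 8, "b": 1, "c": 2}

# Try every ordering and keep the one with the smallest total wait.
best = min(permutations(bursts), key=lambda order: total_wait(order, bursts))
print(best, total_wait(best, bursts))
```

Three processes means only 6 orderings to check, but 20 processes already means roughly 2.4 × 10^18 of them, which is where the awful time complexity bites. It also ignores anything that changes while the schedule runs, such as a process blocking on I/O.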

How would you deal with I/O waits in a system like that? I can happily burn 100% of CPU time spinning on poll(), but that’s not useful work…

¯\_(ツ)_/¯

I guess that's why I asked. I'm just curious if it's even possible.