this post was submitted on 08 Feb 2024

Programmer Humor

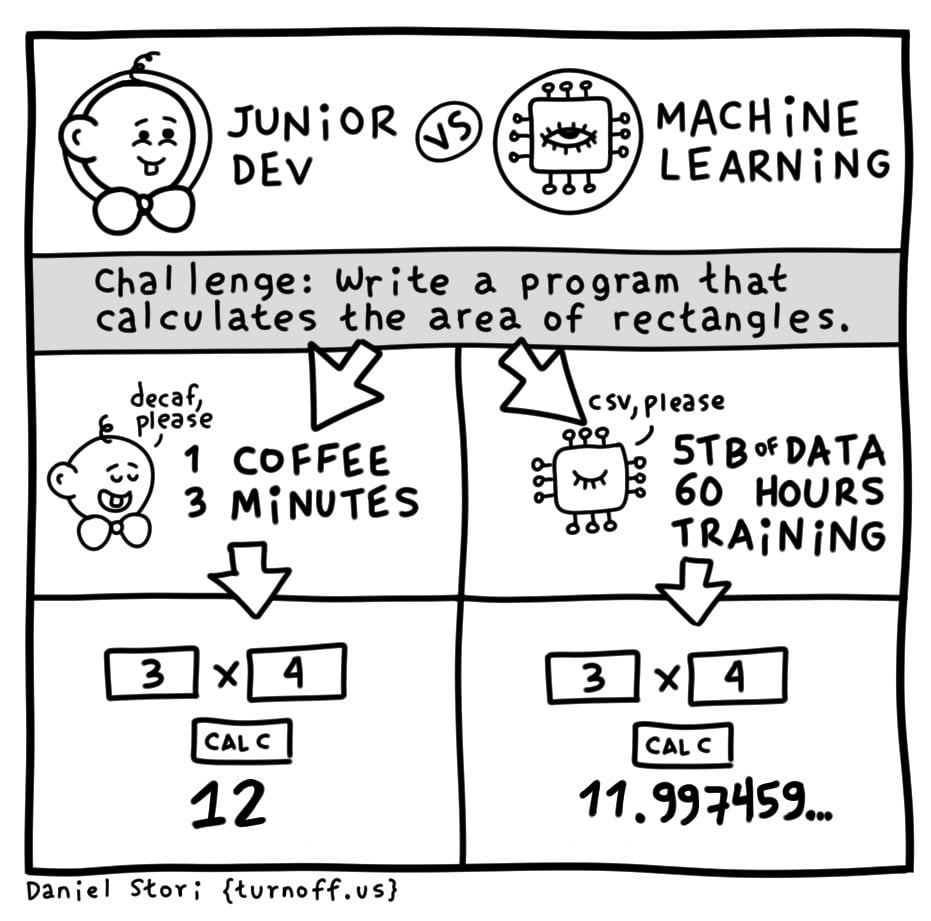

Ahh the future of dev. Having to compete with AI and LLMs, while also being forced to hastily build apps that use those things, until those things can build the app themselves.

Let's invent a thing inventor, said the thing inventor inventor after being invented by a thing inventor.

You could make a religion out of this.

The sun is a deadly laser.

A recursive religion! I'm in!

And also, as a developer, you have to deal with the way Star Trek just isn't as good as it used to be.

Because you're all fucking nerds.

(Me too tho)

SNW has been thoroughly enjoyable so far.

I mean if you have access but are not using Copilot at work you're just slowing yourself down. It works extremely well for boilerplate/repetitive declarations.

I've been working with third party APIs recently and have written some wrappers around them. Generally by the 3rd method it's correctly autosuggesting the entire method given only a name, and I can point out mistakes in English or quickly fix them myself. It also makes working in languages I'm not familiar with way easier.

AI for assistance in programming is one of the most productive uses for it.

Oh I use Copilot daily. It fills the gaps for the repetitive stuff like you said. I was writing Stories in a Storybook.js project once and was able to make it auto-suggest the remainder of my entire component states after writing 2-3. They worked out of the gate too with maybe a single variable change. Initially, I wasn’t even going to do all of them in that coding session just to save time and get it handed off, but it was giving me such complete suggestions that I was able to build every single one out with interaction tests and everything.

Outside of use cases like that and getting very general content, I think AI is a mess. I’ve worked with ChatGPT’s v3.5-4 API a ton and it’s unpredictable and hard to instruct sometimes. Prompts and approaches that worked 2 weeks ago will suddenly give you some weird edge case that you just can’t get it to stop repeating, even when using approaches that worked flawlessly for others. It’s like trying to patch a boat while you’re in it.

The C-suite people and suits jumped on AI way too early and have haphazardly forced it into every corner. It’s become a solution searching for a problem. The other day, a friend of mine said he had a client who casually asked how they were going to use AI on the website they were building for them, like it was just a commonplace thing. The buzzword has gotten ahead of itself and now we’re trying to bring it back down to earth.

@JDubbleu

https://visualstudiomagazine.com/articles/2024/01/25/copilot-research.aspx

That was a pretty interesting read. However, I think it's conflating correlation with causation a little too strongly. The overall vibe of the article was that developers who use Copilot are writing worse code across the board. I don't necessarily think this is the case for a few reasons.

The first is that Copilot is just a tool, and like any tool it can easily be misused. It definitely makes programming accessible to people who couldn't get into it before. We have to keep in mind that it lets a lot of people who are very new to programming build massive programs they otherwise couldn't have. Newer developers are also going to rely on it more heavily because it's a more useful tool to them, but it will also allow them to learn more quickly.

The second is that they use a graph with an unlabeled y-axis to show an increase in reverts, and then never say whether it is raw lines of code or a percentage of lines of code. This is a problem because Copilot allows people to write a fuck ton more code. Like it legitimately makes me write at least 40% more. If the revert rate stays the same, any increase in raw reverts is simply a function of writing more code. I actually feel like it leads to me reverting a smaller percentage of lines of code because it forces me to reread the code that the AI outputs multiple times to ensure its validity.
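To put rough numbers on that point, here's a quick sketch of the arithmetic. Every figure in it is made up for illustration (the 1000-line baseline and the 5% revert rate are assumptions, not data from the article); only the 40% bump comes from my own experience above.

```python
# Hypothetical numbers for illustration only; none of these come from the article.

def reverted_lines(lines_written: int, revert_rate: float) -> float:
    """Raw count of reverted lines for a given amount of code and revert rate."""
    return lines_written * revert_rate

baseline_lines = 1000   # assumed lines written without Copilot
copilot_lines = 1400    # the same developer writing 40% more with Copilot
revert_rate = 0.05      # assumed to be identical in both cases

before = reverted_lines(baseline_lines, revert_rate)
after = reverted_lines(copilot_lines, revert_rate)

# Relative growth in the raw number of reverted lines
raw_increase = (after - before) / before

print(f"raw reverted lines grew by {raw_increase:.0%}")
print(f"revert rate before: {before / baseline_lines:.1%}")
print(f"revert rate after:  {after / copilot_lines:.1%}")
```

The raw count of reverted lines goes up by the same proportion as the code written while the percentage doesn't move at all, which is why an unlabeled y-axis can't tell you which story the graph is telling.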

This ultimately comes down to the developer who's using the AI. It shouldn't be writing massive complex functions. It's just an advanced, context-aware autocomplete that happens to save a ton of typing. Sure, you can let it run off and write massive parts of your code base, but that's akin to hitting the next word suggestion on your phone keyboard a few dozen times and expecting something coherent.

I don't see it much differently than when high-level languages first became a thing. The introduction of Python allowed a lot of people who would never have written code in their life to immediately jump in and be productive. Both high-level languages and Copilot make programming accessible to more people than the tools before them did, and I don't think that's a bad thing even if there are some negative side effects. Besides, in anything that really matters there should be thorough code reviews and strict standards. If janky AI-generated code is getting into production, that is a process issue, not a tooling issue.