this post was submitted on 29 Jan 2024

ADHD memes


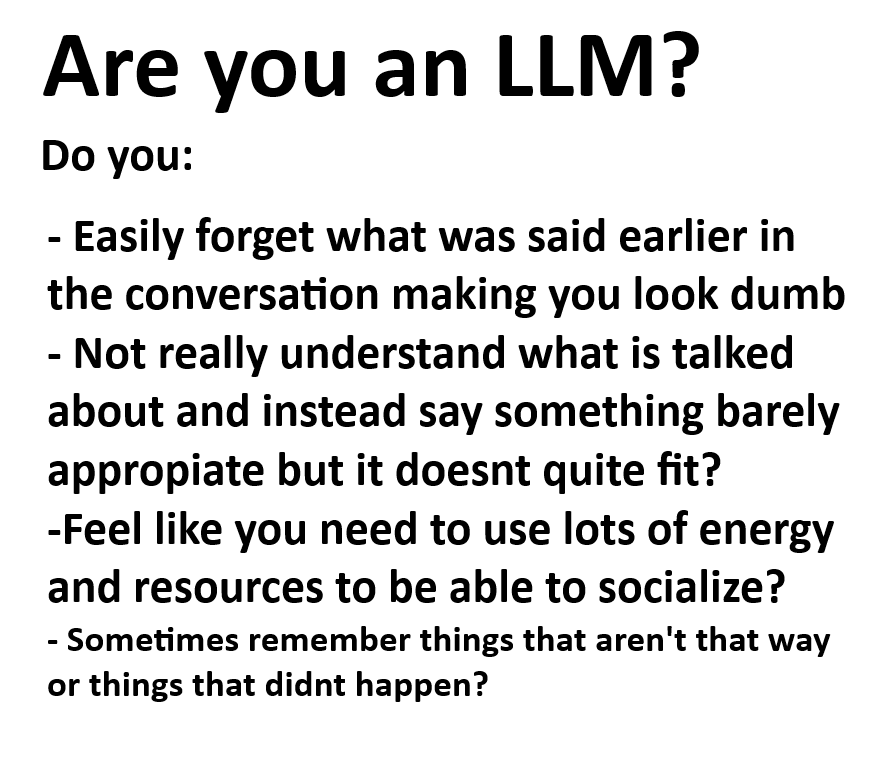

I feel this in my soul. Imagine having the potential to get almost any question in the world answered in a matter of seconds, and instead of praising what a technological feat this is, people are like "it draws just as good as me in less than 60 seconds?!?! REEEEE!"🙄🤦‍♂️

Edit: lol. I expressed this poorly. What I meant is that while it's not yet accurate enough to answer almost any question in the world in a few seconds, I'm willing to bet that within 5 years its accuracy will be close to, if not better than, that of traditional search engines.

Leaving my original dumbassery up. Everyone says stupid shit at some point. And some say stupid shit more than others. I'm probably the latter.🤷‍♂️😬

We've had that for almost 20 years with Wikipedia. People are just too dumb and lazy to use it. It's more reliable than ChatGPT, but people will denounce Wikipedia as unreliable while still using ChatGPT.

Well, even Wikipedia doesn't have some things. I phrased what I was trying to say badly anyhow. I meant that it will EVENTUALLY get really accurate. Within the next 5 years I think we'll be able to rely on it more than on search engines or Wikipedia.🤷‍♂️ This is just my prediction and opinion.

This is like saying people who use cars are "just too lazy to walk." Or people who use their GPS navigation are "too lazy to use a map."

The amount of time and effort matters.

It's like searching for a picture of Prague, seeing a drawing of Delhi, and then concluding you've been there. It's not about laziness. It's about accuracy.

Yeah, we're not there yet, but the way things are going, I don't see it being THAT far off. Maybe within 5 years it'll be as accurate as anything else.

Yes, I think if we can get an LLM to work while providing high-quality, real-world sources, it will be a game-changing technology across domains. As it stands, though, it's like believing a magician really does magic. The tricks they employ are incredibly useful in a magic show, but if you expect them to actually cast a fireball in your defense, you'll be sorely mistaken.

Huh, I was under the impression that ChatGPT cited its sources. I know others do.

Did you read my comment at all? I was replying to a comment about the level of effort, which is what my analogy addresses.

Your hyperbole notwithstanding, if the accuracy isn't good enough for you, don't use it. Lots of people find LLMs useful even in their current state of imperfect accuracy.

Did you read mine? If you wanted a depiction of a city, it's more than good enough. In fact it's amazing what it can do in that respect. My point is: it gets major details wrong in a way that feels right. That's where the danger lies.

If your GPS consistently brought you to the wrong place, but you thought it was the right place, do you not think that might be a problem? No matter how many people found it useful, it could be dangerously wrong in some cases.

My worry is precisely that people find it so useful to "look things up", paired with the fact that it has a tendency to wildly construct 'information' that feels true. It's a real, serious problem that people need to understand when using it like that.

Or how people are unimpressed with AI image generation but, at the same time, clearly can't tell it apart (people telling artists their art is AI-generated because it looks too good or too strange). Or we have AI-powered V-Tubers interacting with live audiences. And then I stop and think that it's only been a few years since AI started its modern-day breakthrough; what we're seeing now are primitive cave drawings.