this post was submitted on 25 Jun 2023

Programmer Humor

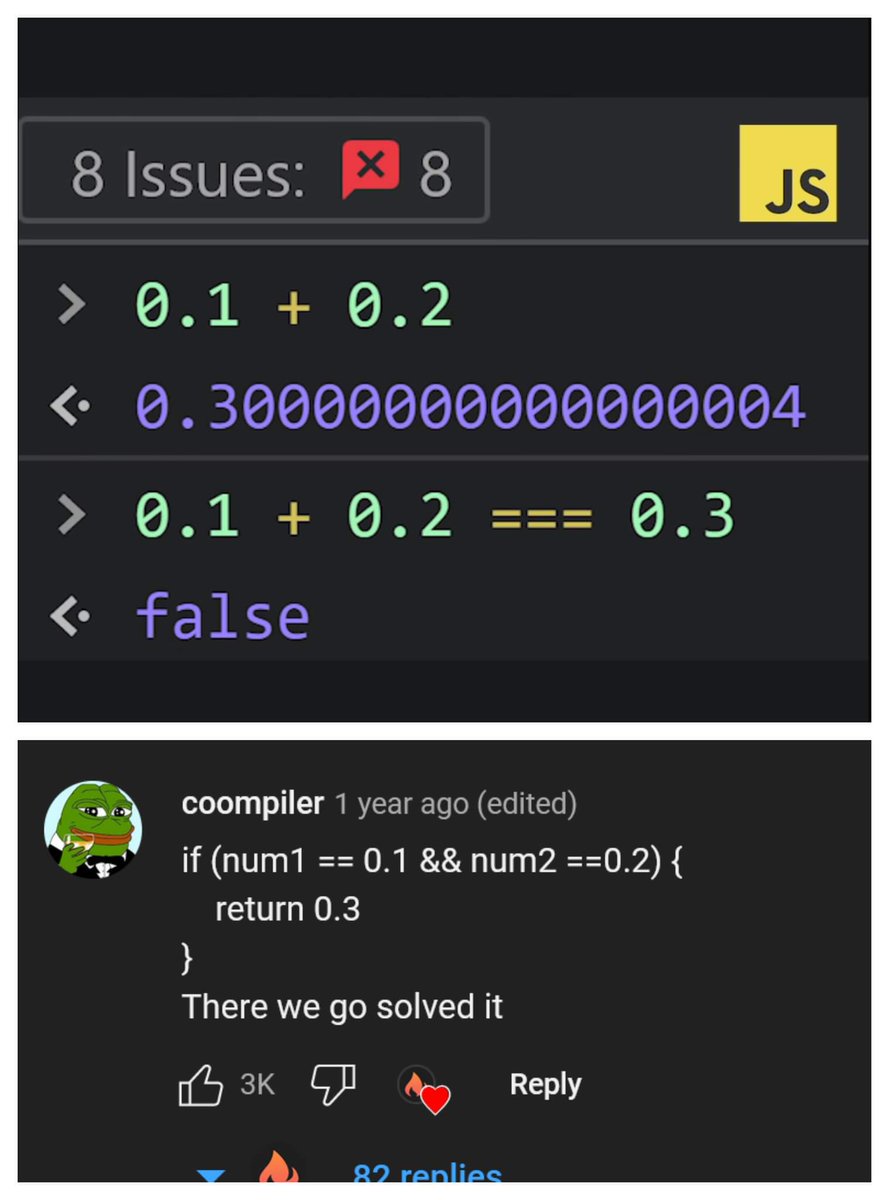

I know this is a humor subreddit and this is a joke, but this problem wasted a whole week of mine since I was dealing with absurdly small numbers in my simulations. Use fsum from the math library in Python to solve this, people.
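A minimal sketch of what fsum buys you, assuming the classic case of adding 0.1 ten times (the variable names are just for illustration). The explicit loop stands in for plain left-to-right float addition:

```python
import math

values = [0.1] * 10

# Plain left-to-right addition: each += rounds, and the errors pile up.
naive = 0.0
for v in values:
    naive += v

# math.fsum tracks intermediate partial sums so no precision is lost
# along the way; only the final result is rounded.
accurate = math.fsum(values)

print(naive == 1.0)     # False
print(accurate == 1.0)  # True
```

This only helps with summation error. It does not make 0.1 itself exactly representable.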

The lesson here is that floating point numbers are not exact and that you should never do a straight equality comparison with them. Instead, check whether they are within some small tolerance of each other. In Python that is done with math.isclose(0.1 + 0.2, 0.3).

Please don't try to approximate. Use the decimal module to represent numbers and everything will work as expected, and it has a ton of other features you didn't know you needed.

https://docs.python.org/3/library/decimal.html#module-decimal
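For comparison, here is a rough sketch of both suggestions from the comments above side by side: the tolerance check with math.isclose and exact decimal arithmetic with Decimal. The variable names and the 1e-12 / 1e-9 values are made up for illustration.

```python
import math
from decimal import Decimal

# Straight comparison fails because 0.1 and 0.2 are not exact in binary.
direct = (0.1 + 0.2 == 0.3)

# Tolerance comparison: the default rel_tol is 1e-09.
close = math.isclose(0.1 + 0.2, 0.3)

# Caveat for very small numbers: the default abs_tol is 0.0, so nothing
# except zero itself is "close" to zero unless you pass abs_tol.
near_zero_default = math.isclose(1e-12, 0.0)
near_zero_abs = math.isclose(1e-12, 0.0, abs_tol=1e-9)

# Decimal built from strings represents 0.1, 0.2 and 0.3 exactly.
exact = (Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))

# Building a Decimal from a float carries the binary error along.
from_float = (Decimal(0.1) == Decimal("0.1"))

print(direct, close, near_zero_default, near_zero_abs, exact, from_float)
# False True False True True False
```

Note the string constructor: Decimal(0.1) faithfully stores the float's binary approximation, which is usually not what you want.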

Decimal does come at a cost though, being slower than raw floats. When you don't need precision but do need performance then it is still valid to use floats. And quite often you don't need absolute precision for things.

Decimal is less precise than binary. It's just imprecise in ways that are less surprising to humans.
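A small sketch of Decimal still being imprecise, just in a more familiar way: 1/3 has no finite decimal expansion, so it gets rounded to the context precision. The precision is pinned to 28 digits here (the module's default) so the result does not depend on any global settings.

```python
from decimal import Decimal, localcontext

with localcontext() as ctx:
    ctx.prec = 28  # significant digits kept after each operation
    third = Decimal(1) / Decimal(3)  # rounded to 28 threes
    roundtrip = third * 3            # 28 nines, not 1

print(roundtrip == Decimal(1))  # False
```

Same kind of rounding as binary floats, only it happens at values where a human with a pocket calculator would expect it.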

Be careful however, if you work with really large numbers this will absolutely tank your performance and eat up all your memory.

There's a reason floating point numbers exist. They are very good at what they do, at the cost of lower precision and being a bit more difficult to work with.

One of my lecturers mentioned that a way they would get around this was to store all values as ints and then insert a . two characters before the end when displaying them.

Yeah, this works especially well for currencies (effectively doing all calculations in cents/pennies), as you do need perfect precision throughout the calculations, but the final result gets rounded to two-digit precision anyway.
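Roughly what the integer-cents approach looks like in Python. format_cents is a hypothetical helper made up for this sketch, and it assumes a currency with exactly two decimal places:

```python
def format_cents(cents: int) -> str:
    """Render an integer amount of cents as a string with two decimals."""
    sign = "-" if cents < 0 else ""
    units, remainder = divmod(abs(cents), 100)
    return f"{sign}{units}.{remainder:02d}"


# All arithmetic stays in exact integers; the point is added only for display.
prices_in_cents = [1999, 5, 250]
total = sum(prices_in_cents)

print(format_cents(total))  # 22.54
print(format_cents(5))      # 0.05
print(format_cents(-150))   # -1.50
```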

Quite a horrible hack. Most modern languages have a decimal type that handles floating-point rounding. And if not, you should just use rounding functions to round currency to two digits.

Not sure what financial applications you develop. But what you suggest wouldn't pass a code review in any financial-related project I saw.

Using integers for currency-related calculations and formatting the output is no dirty hack, it's industry standard because floating-point arithmetic is, on contemporary hardware, never precise (can't be, see https://en.wikipedia.org/wiki/IEEE_754 ) whereas integer arithmetic (or integers used to represent fixed-point arithmetic) always has the same level of precision across all the range it can represent. You typically don't want to round the numbers you work with, you need to round the result ;-) .

Phew. Sometimes I read things and think I'm going crazy. I work in ERP/accounting software and was sure the monetary data type I've been using was backed by integers, but the post you're replying to had me second guessing myself...

Had to think about it, but yeah, I guess you can't freely do division or non-integer multiplication with integer cents, as standard integer division discards the fractional part (flooring or truncating, depending on the language), which forces you to deal with rounding at every step.
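To make the division problem concrete, here is a sketch of splitting an amount in integer cents without losing any. split_cents is a hypothetical helper and assumes a non-negative total; handing the leftover cents to the first shares is just one possible policy.

```python
def split_cents(total_cents: int, parts: int) -> list[int]:
    """Split a non-negative amount into `parts` shares that sum to the total."""
    base, leftover = divmod(total_cents, parts)
    # The first `leftover` shares get one extra cent each.
    return [base + 1 if i < leftover else base for i in range(parts)]


shares = split_cents(100, 3)
print(shares)       # [34, 33, 33]
print(sum(shares))  # 100

# Python's // floors, so negative results round away from zero;
# many other languages truncate toward zero instead.
print(-7 // 2)      # -4
```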

You could convert to a float for the division/multiplication and you do get more efficient addition/subtraction as well as simpler de-/serialization, but in most situations, it's probably less trouble to use decimals.

You do not want to use floats for any part of calculating money. The larger the value, the larger the error in them, which is not a trait you want when dealing with money. Fixed point numbers/decimals/big ints are much better for this. If you want greater than cent precision, treat the values as fractions of a cent (i.e. move the decimal point over one more place, or however many you need for your application). The maths is the same no matter where you place the decimal point.
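A quick way to see the "larger value, larger error" effect is math.ulp (Python 3.9+), which gives the gap between a float and the next representable one. The 1e16 magnitude is picked purely for illustration:

```python
import math

small_gap = math.ulp(1.0)   # spacing between neighbouring floats near 1.0
large_gap = math.ulp(1e16)  # spacing between neighbouring floats near 1e16

print(small_gap)  # about 2.2e-16
print(large_gap)  # 2.0

# Near 1e16 adjacent floats are 2.0 apart, so adding 1.0 changes nothing.
unchanged = (1e16 + 1.0 == 1e16)
print(unchanged)  # True

# Integers keep the same resolution at any magnitude.
print(10**16 + 1 == 10**16)  # False
```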

Fixed point notation. Before floats were invented, that was the standard way of doing it. You needed to keep your equation within certain boundaries.

Good humor is based on reality