Software Gore

Welcome to /c/SoftwareGore!

This is a community where you can poke fun at nasty software. This community is your go-to destination to look at the most cringe-worthy and facepalm-inducing moments of software gone wrong. Whether it's a user interface that defies all logic, a crash that leaves you in disbelief, silly bugs or glitches that make you go crazy, or an error message that feels like it was written by an unpaid intern, this is the place to see them all!

Remember to read the rules before you make a post or comment!

Community Rules

These rules are subject to change at any time with or without prior notice. (last updated: 7th December 2023 - Introduction of Rule 11 with one sub-rule prohibiting posting of AI content)

- This community is a part of the Lemmy.world instance. You must follow its Code of Conduct (https://mastodon.world/about).

- Please keep all discussions in English. This makes communication and moderation much easier.

- Only post content that's appropriate to this community. Inappropriate posts will be removed.

- NSFW content of any kind is not allowed in this community.

- Do not create duplicate posts or comments. Such duplicated content will be removed. This also includes spamming.

- Do not repost media that has already been posted in the last 30 days. Such reposts will be deleted. Non-original content and reposts from external websites are allowed.

- Absolutely no discussion of politics is allowed. There are plenty of other places to voice your opinions, and fights over political opinions are the last thing this community needs.

- Keep all discussions civil and lighthearted.

- Do not promote harmful activities.

- Don't be a bigot.

- Hate speech, harassment or discrimination based on one's race, ethnicity, gender, sexuality, religion, beliefs or any other identity is strictly disallowed. Everyone is welcome and encouraged to discuss in this community.

- The moderators retain the right to remove any post or comment and to ban users or bots, even when these rules have not strictly been violated, if they deem it necessary.

- Lastly, use common sense. If you think you shouldn't say something to a person in real life, then don't say it here.

- Community-specific rules:

- Posts that contain any AI-related content as the main focus (for example: AI “hallucinations”, repeated words or phrases, unexpected responses, etc.) will be removed. (polled)

You should also check out these awesome communities!

- Tech Support: For all your tech support needs! (partnered)

- Hardware Gore: Same as Software Gore, but for broken hardware.

- DiWHY: Questioning why some things exist...

- Perfect Fit: For things that perfectly and satisfyingly fit into each other!


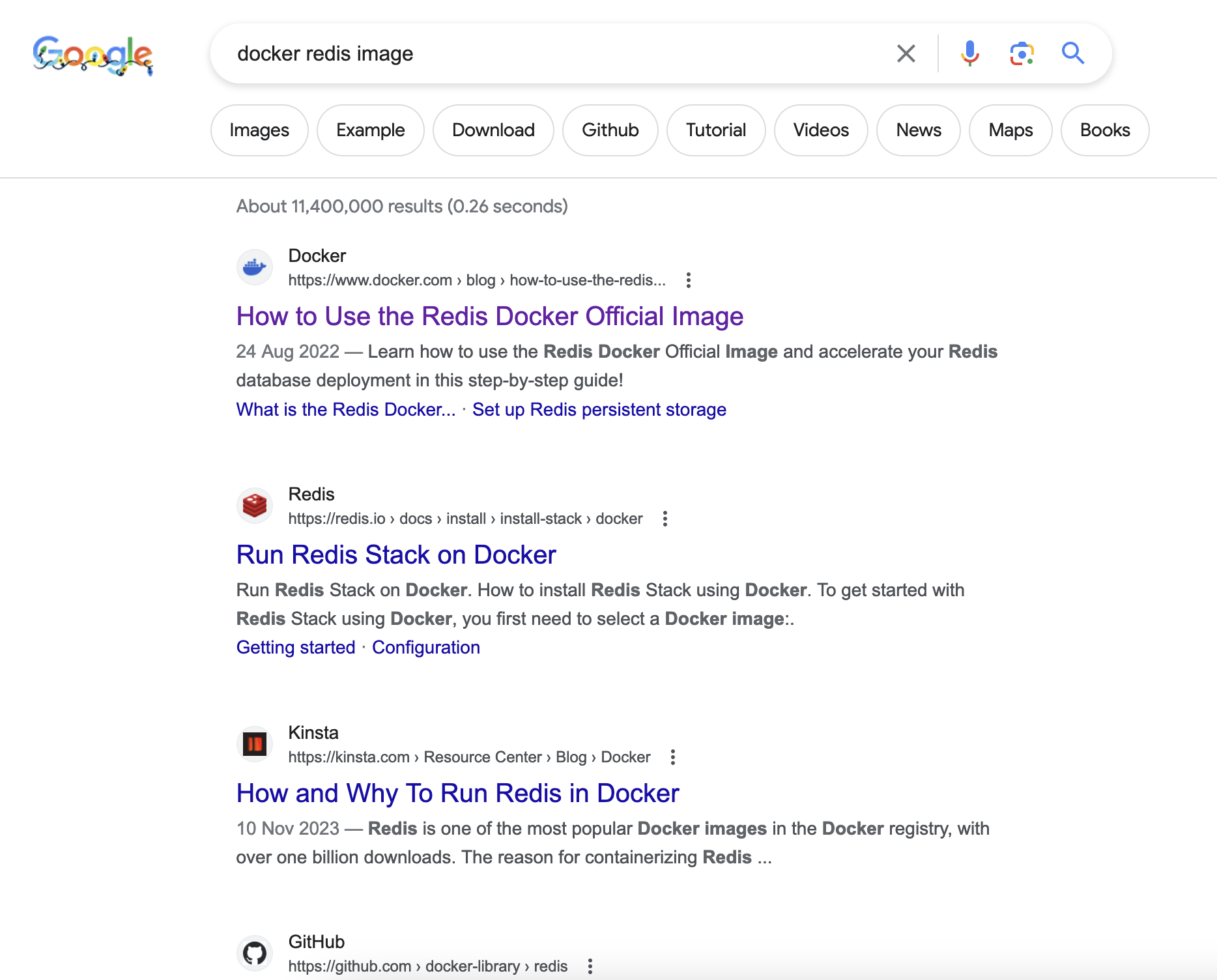

These things aren’t smart. They don’t know the context of your search. They don’t ask “why are they looking for [keyword]”; they just match results that score strongly for the keyword. Yeah, blogs tend to suck up the traffic, but the results you got are one step away from what you were actually looking for but didn’t include in the search prompt.
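To make the "scores strongly for the keyword" point concrete, here is a minimal sketch of naive keyword matching. It is not how any real search engine works; the function names and the two sample pages are made up for illustration. It only shows that counting keyword hits rewards repetition and knows nothing about why you searched.

```python
import re
from collections import Counter


def keyword_score(query: str, document: str) -> int:
    """Count how often the query's words occur in the document (no notion of intent)."""
    words = Counter(re.findall(r"[a-z0-9]+", document.lower()))
    return sum(words[term] for term in re.findall(r"[a-z0-9]+", query.lower()))


def rank(query: str, documents: dict[str, str]) -> list[str]:
    """Return document names ordered from highest to lowest keyword score."""
    return sorted(
        documents,
        key=lambda name: keyword_score(query, documents[name]),
        reverse=True,
    )


# Hypothetical pages: the keyword-stuffed blog beats the page that actually helps.
pages = {
    "blog": "python error python error fix python error fast, best python error tips",
    "docs": "This page explains the cause of the error and how to resolve it in Python.",
}

print(rank("python error", pages))
```

The stuffed "blog" text scores 8 against 2 for "docs", so it ranks first even though it says nothing useful.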

The real problem is that the internet is being littered with AI-generated articles and blog posts. Optimizing your SEO has never been easier because of how quickly AI can analyse keywords, trends, etc. This process used to be extremely time-consuming; not anymore.

So now we have content that is generated for the sole purpose of getting traffic and serves no real value, competing with actual genuine sources. That's why we're seeing a shift in our search results.

Ideally, search engines would now adapt and learn to differentiate between "real" and AI-generated content. But just like with global warming, I fear we've gone beyond the point of no return and must suffer the consequences.

@Dagamant

@cozy_agent

There's still hope; we just need to actively sabotage these SEO hubs. The easiest and safest approach would be an SEO site flagger plugin that hides links to sites predominantly featuring SEO garbage.
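A rough sketch of the filtering logic such a flagger could use, written in Python rather than as an actual browser extension. The domain list, function names, and URLs are all hypothetical placeholders; a real plugin would need a maintained list and would run against the search results page itself.

```python
from urllib.parse import urlparse

# Hypothetical, community-maintained list of flagged domains (placeholder names).
FLAGGED_DOMAINS = {"seo-farm.example", "content-mill.example"}


def is_flagged(url: str, flagged: set[str] = FLAGGED_DOMAINS) -> bool:
    """True if the URL's host is a flagged domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in flagged)


def visible_results(urls: list[str]) -> list[str]:
    """Keep only the links whose host is not on the flag list."""
    return [url for url in urls if not is_flagged(url)]


results = [
    "https://seo-farm.example/top-10-fixes",
    "https://www.content-mill.example/article",
    "https://docs.example.org/guide",
]

print(visible_results(results))
```

Matching on the host rather than the full URL means one flag covers every page and subdomain of a site.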

I hope so, but I have my doubts. In many cases it's already impossible for a human to differentiate between AI-generated and real content. This applies to both images and text.

While I'm on the subject: data poisoning is an interesting idea. If what the researchers behind the tool say is true, it may change how copyright laws work for visual content.