I thought it was a coincidence that Behind the Bastards was covering this subject on the show this week, then I saw the author. It's Robert Evans, the host. If you want more information on this, listen to this week's episodes of Behind the Bastards, I guess.

In the first episode, the description said it was an adaptation of this Rolling Stone article, in case you weren't aware.

I didn't know that, thanks.

Me too, I thought I was tripping. Love seeing Robert get recognized.

. . . NVIDIA in-house counsel Nikki Pope: In a panel on “governing” AI risk, she cited internal research that showed consumers trusted brands less when they used AI.

This gels with research published last December that found only around 25 percent of customers trust decisions made by AI over those made by people. One might think an executive with access to this data might not want to admit to using a product that would make people trust them less.

Indeed.

25% is an abnormally large number considering the current technological inability to do the same things a human can. In my experience, current "AI" is mostly useful for very specific tasks with very narrow guidelines.

What's interesting is the research showing that when humans don't know the output is generated by an AI, they prefer and trust it more than output from actual humans.

Among the early adopter set, people couldn’t wait for the chance to hand over more of their agency to a glorified chatbot.

Spot on.

Anywhere speculative investment is involved, there are cult-like patterns. If your investors don't believe that your product is going to revolutionize its field, you're not going to get the kind of funding these startups want.

…an AI-assisted fleshlight for premature ejaculators.

Sounds straight out of Idiocracy lol

I was a professional tech futurist and while I normally made more like ~5yr forecasts, around fifteen years ago I wrote a story for fun that was a further out prediction structured around a narrative taking place in the early 2030s.

In it, in addition to there being AR computing interfaces and self driving cars, the key tech advance was AI having been developed around a decade earlier - outside of these three things most of the world was the same.

By this time the AI was shoved into everything from toasters to musical instruments, and the story followed a new class of job that was solely focused on getting AI to do what people wanted by using natural language (what we'd now call a "prompt engineer").

The main antagonists were a modern resurgence of the Luddite movement which had grown in popularity as AI had grown.

The story even had an AI powered dildo.

It's been a pretty fucking surreal past few years watching what's been taking place.

Lol wanna share? Color me interested

I am so excited to order my Digital Monk who will free me from the drudgery of having to believe in things. Much like a dishwasher washes dishes so you do not have to, the Digital Monk believes in things so you do not have to.

They will be able to believe all commercials and politicians for me, freeing me from the heavy labor of Critical Thinking.

I love the tech but have much the same feelings. AI may improve the world eventually, but I predict a painful future in the intervening time. I hope investors turn sooner rather than later to slow this train, but we’ll see. Lots of big players are betting the farm on AI, to the point where they’ll do everything to see it through.

Every advance in technology (see all the Luddites in history) has been accompanied by a wake of pain.

What about toilet technology?

Especially toilets. They know what they did...

Imagine having to raise an entire city 6 feet so there's room to install a sewage system.

This is probably more of a failing of infrastructure and planning than of technology. But I think if we only handle advances in technology as a thing on paper and not a thing in society used by people, then we miss an important but simple point. Technologies are used by people, and that is the only way they can change society.

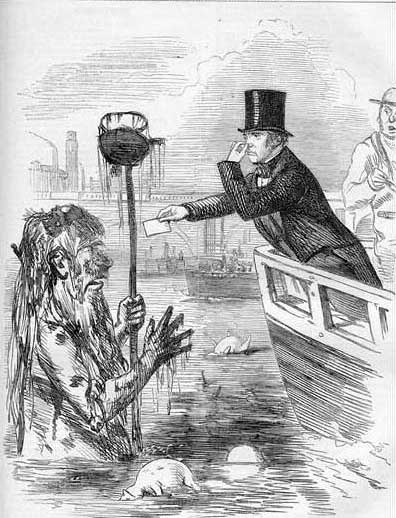

In any case, toilets ruined London for a couple of decades:

As the population of Britain increased during the 19th century, the number of toilets did not match this expansion. In overcrowded cities, such as London and Manchester, up to 100 people might share a single toilet. Sewage, therefore, spilled into the streets and the rivers.

This found its way back into the drinking water supply (which was brown when it came out of the pipes) and was further polluted by chemicals, horse manure and dead animals; as a result, tens of thousands died of water-borne disease, especially during the cholera outbreaks of the 1830s and 1850s.

In 1848, the government decreed that every new house should have a water-closet (WC) or ash-pit privy. "Night soil men" were engaged to empty the ash pits. However, after a particularly hot summer in 1858, when rotting sewage resulted in "the great stink (pictured right in a cartoon of the day)", the government commissioned the building of a system of sewers in London; construction was completed in 1865. At last, deaths from cholera, typhoid and other waterborne diseases dropped spectacularly.

The Great Stink only arose because of the development of a sewer system that piped all the sewage to the Thames. And it didn't stop with the stink:

Despite Bazalgette’s ingenuity, the system still dumped tons of raw sewage into the Thames - sometimes with unfortunate results. The death toll from the sinking of the pleasure boat Princess Alice in 1878 would certainly have been smaller if it had sunk elsewhere on the Thames. As it was, it went down close to one of the main sewage outfalls. Approximately 640 passengers died, many poisoned rather than drowned. Horror at the deaths was instrumental in the building of a series of riverside sewage treatment plants. [Science Museum]

So that's just one example of toilet technology causing a mess. I bet there are others such as the need for an 'S' pipe. But ultimately, technological improvements require a little foresight, insight, feedback and a lot of social power.

Haha wow I had no idea, just was being a smart ass and got a fun fact out of it. Thanks for sharing!

Not every new piece of technology is actually an advancement. You have an extreme case of selection bias in your assessment.

Name 5 that did not have sweeping adverse consequences, with accompanying sources. I will even accept Wikipedia pages if they have attributions. Make sure they are major ones that really shaped the course of human existence moving forward from their introduction.

Behind the Bastards is doing a two-part podcast on this too. Those AI cult mofos are fucking crazy.

It's one of the terrible hype trains again... However, I wonder what makes him think that humans are something clearly more than a model that gathers data through the senses and reacts to external stimuli based on the current model. I think that's special pleading.

I've seen a lot of reaction to AI that smacks of some kind of species-level narcissism, IMO. Lots of people have grown up being told how special humans were and how there were certain classes of things that were "uniquely human" that no machine could ever do, and now they're being confronted with the notion that that's just not the case. The psychological impact of AI could be just as distressing as the economic impact, it's going to be some interesting times ahead.

None of the AI technology we have now even comes close to human intelligence

And yet it's writing poetry and painting pictures. That makes it worse, doesn't it? Turns out you don't have to be very intelligent to do those things.

Yeah, shitty poetry and entirely unoriginal artwork. I don't know what your deal is but there's a hell of a lot more to consciousness and the human brain than that and current AI tech doesn't even come close to it.

Better art and poetry than most humans can produce.

It's been a wild ride of people thinking things can't be the case which then turn out to be the case.

For example, this neat work just out of NYU: https://www.nyu.edu/about/news-publications/news/2024/february/ai-learns-through-the-eyes-and-ears-of-a-child.html

I’m not sure how you get this from the article, though. Evans has no doubt it’s possible; like anyone with any knowledge of the state of AI he also knows that’s really fucking far away and just science fiction today. On the other hand, if you’re going to reduce things to the absurd level comment chain OP did, I suppose the future is now because judicial AI is just as racist as cops.

I'm not talking about the article specifically, just a general class of reaction I've seen.

“What we call AI lacks agency, the ability to make dynamic decisions of its own accord, choices that are ‘not purely reactive, not entirely determined by environmental conditions.’”

That's from the article and I referred to that.

So are you suggesting that humans “[lack] agency [and] the ability to make dynamic decisions?” Your point is that humans are just AI and, if we’re going from this quote, we can’t have agency if we are the same.

I'm not saying that humans are just AI, I'm just saying that there's no fundamental difference in the sense that we also respond to stimuli. We don't have free will.

That’s fair. With that line of logic, the author had to say what he said so there’s no value behind criticizing him. Granted you had to criticize him because you have no free will either. The conversation is completely meaningless because all of this is just preprogrammed action.

Depends on how you define meaning. I find meaning in experiencing life. It may be predetermined or have random elements in it, but the experience is unique to me.

Anyway, given all we know about ourselves and the universe, I haven't heard a coherent proposal of how free will could work. So, until there's good evidence to convince me otherwise... I can't help but believe it doesn't exist.

Right! Without free will the only meaning you have is whatever you were preordained to have. Even your sense of meaning is just a predefined firing of neurons set into motion when it all began. This conversation, my response to you, your response to me, it’s all just something we have no control over unless our brains were wired back when to believe that infinitely small sub(infinite)atomic particles colliding is any form of meaning.

So, until there’s good evidence to convince me otherwise … I can’t help but believe it doesn’t exist.

Is it other people’s jobs to bring this evidence to you?

Personally, I think that's oversimplification to the level of absurdity, for both AI and humans.

That description can easily be applied to insects and animals as well.

Humans are animals, so that seems fine to me.

Have read the article.

Maybe call me ignorant, but as someone from the Eastern part of the world, I sometimes wonder why these people worry when all of this AI stuff is still prompted by human input, in the sense that we are the ones who create it and dictate its actions. All in all, they're just closed-loop automata that happen to have better feedback input than your ordinary closed-loop machines.

Maybe these people are worried because they (regular people) don't know how these things work, or simply because they lack self-control in the first place, which is what makes them feel like they have no control over what's happening.

I understand the danger of AI too, but those who prompt it are human too, so it is just human nature itself.

While this is true in aggregate, consider Elon's Grok which then turned around and recognized trans women as women, black crime stats as nuanced, and the "woke mind virus" as valuable social progress.

This was supposed to be his no holds barred free speech AI and rather than censor itself it told his paying users that they were fucking morons.

Or Gab's Adolf Hitler AI which, when asked by a user if Jews were vermin, said they were disgusting for having suggested such a thing.

So yes, AI is a reflection of human nature, but it isn't necessarily an easily controlled or shaped reflection of that.

Though personally I'm not nearly as concerned about that being the continuing case as most people it seems. I'm not afraid of a world in which there's greater intelligence and wisdom (human or otherwise) but one in which there is less.

AI is a reflection of human nature, but it isn’t necessarily an easily controlled or shaped reflection of that.

This is partly true, especially for models that are deployed publicly and use large samples gathered from large numbers of people. The part we can't control is whether the model is trained on a skewed dataset that benefits certain outcomes.

It depends on which stage of training. As the recent Anthropic research showed, fine tuning out behavior isn't so easy.

And at the pretrained layer you really can't get any halfway decent results with limited data sets, so you'd only be able to try to bias it at the fine tuned layer with biased sourcing, but then per the Anthropic findings (and the real world cases I mentioned above) you are only biasing a thin veneer over the pretrained layer.

This is not some "computers will outpace us" Terminator shit. Algorithms are human-dictated. We are the sole architects of our demise, not something else.

This is the best summary I could come up with:

I was watching a video of a keynote speech at the Consumer Electronics Show for the Rabbit R1, an AI gadget that promises to act as a sort of personal assistant, when a feeling of doom took hold of me.

Specifically, about a term first defined by psychologist Robert Lifton in his early writing on cult dynamics: “voluntary self-surrender.” This is what happens when people hand over their agency and the power to make decisions about their own lives to a guru.

At Davos, just days ago, he was much more subdued, saying, “I don’t think anybody agrees anymore what AGI means.” A consummate businessman, Altman is happy to lean into that old-time religion when he wants to gin up buzz in the media, but among his fellow plutocrats, he treats AI like any other profitable technology.

As I listened to PR people try to sell me on an AI-powered fake vagina, I thought back to Andreessen’s claims that AI will fix car crashes and pandemics and myriad other terrors.

In an article published by Frontiers in Ecology and Evolution, a research journal, Dr. Andreas Roli and colleagues argue that “AGI is not achievable in the current algorithmic frame of AI research.” One point they make is that intelligent organisms can both want things and improvise, capabilities no model yet extant has generated.

What we call AI lacks agency, the ability to make dynamic decisions of its own accord, choices that are “not purely reactive, not entirely determined by environmental conditions.” Midjourney can read a prompt and return with art it calculates will fit the criteria.

The original article contains 3,929 words, the summary contains 266 words. Saved 93%. I'm a bot and I'm open source!

Hype cycles are nothing new! Way back in the 1800s they used to have world tech fairs as well, which were full of inventions that you’d think were full of shit or utterly dystopian. But adoption mostly depends on the masses, and if they’re not going to jibe with something, then it doesn’t matter how many nerds are into it. XR/AR is a good example for now, but maybe that’ll change as the form factor of the tech improves.

I think the main thing that comes out of the AI hype might be digital assistants which know you well enough to assist you like a real assistant, or can do easy but time-sink tasks. ChatGPT-based assistants, Cortana, Siri, and Alexa are not flexible enough to replace an actual executive assistant, for example. Any current digital assistant requires a lot of hand-holding.

For people who can’t shell out an executive assistant salary, but need one (almost everyone who works), this will be awesome. For people who can afford an executive assistant, their life is complex enough that they’ll assign the EA to something else.